Data Engineering Services

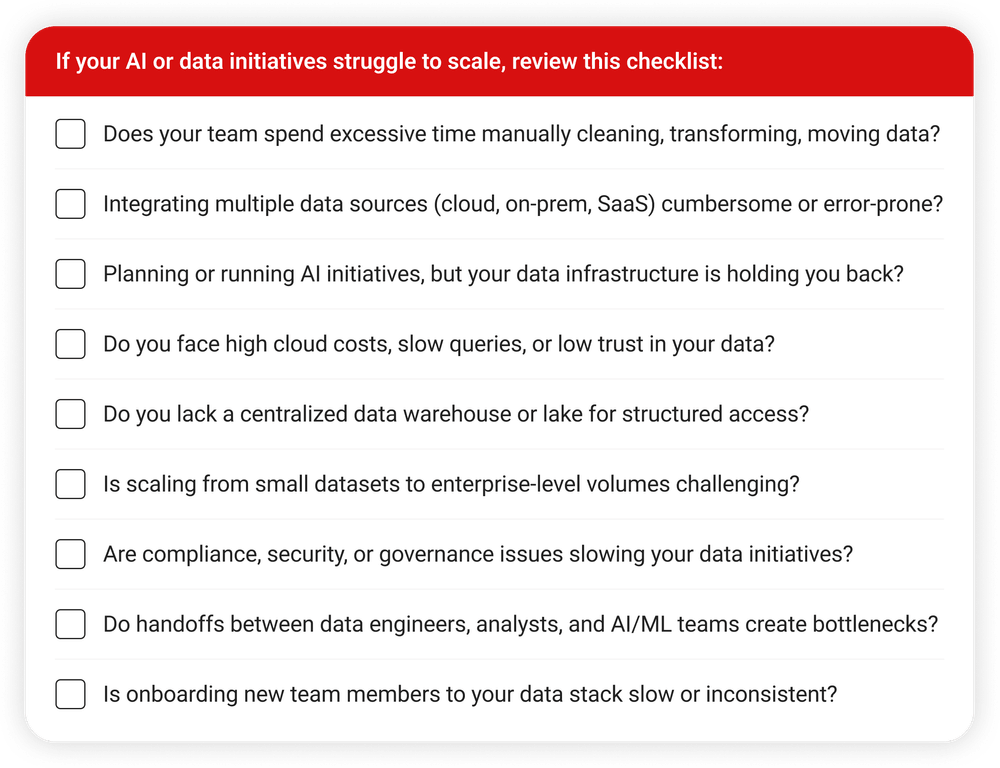

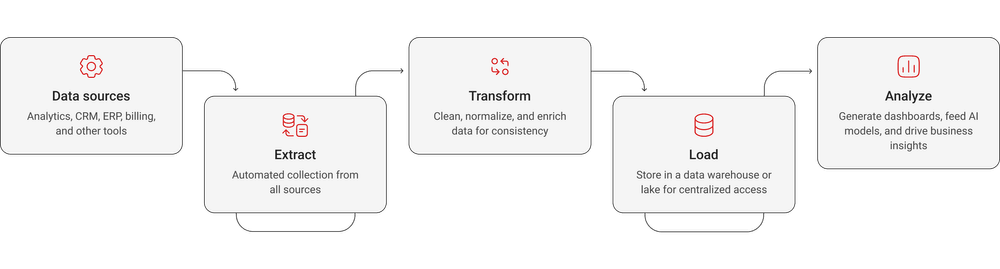

Automate your data pipelines with data engineering services. Get infrastructure that automatically processes your data and loads it into data warehouses or data lakes.

years of custom software development

years of AI & DS expertise

in-house employees