When I first looked at the system, nothing suggested a problem. Accuracy stayed high, uptime was stable, and benchmark results landed exactly where we expected them to. From the perspective of dashboards and reports, the system appeared complete and dependable.

By that point, the system had already been running in production for several months without incident. I’ve reviewed similar systems in EdTech before (especially adaptive learning and decision-support tools), where clean metrics initially masked deeper alignment issues. This was one of those cases, and it’s worth sharing how it unfolded in practice.

Where user experience diverged

The decision-support module behaved predictably. Data pipelines ran cleanly, models produced consistent outputs, and the platform operated without interruption: no incidents, no regressions, no alerts.

This stability held across multiple release cycles over roughly a quarter. From a technical standpoint, it looked like a textbook example of a “healthy” AI system.

What made me curious was how people interacted with the system during everyday work. The module sat directly inside an enterprise planning and prioritization workflow. Its recommendations were meant to guide real decisions, not just inform them.

Over time, I noticed that users rarely took those recommendations at face value. They paused, checked, adjusted, and sometimes bypassed them altogether.

These interaction patterns became noticeable only after several weeks of consistent daily use. I’ve seen this behavior before in learning platforms, where human judgment quietly compensates for what the model cannot see.

This behavior clustered around specific situations: periods of rapid change, edge conditions, and operational constraints that the system didn’t explicitly represent. In those situations, the model produced answers that assumed a more stable and predictable environment than the one users were actually operating in.

In practice, these scenarios unfolded over short operational windows (sometimes hours or days) well outside the model’s learned time horizon. It reminded me of a curriculum that works perfectly in a controlled classroom but starts to fray once real students bring their own pace and constraints.

Accuracy stayed strong even as decision usefulness varied depending on context. The longer the system stayed in production, the clearer that gap became. It took several production cycles and repeated decision reviews over a few months for this divergence to become undeniable.

Like a map that is accurate in scale but outdated in terrain, the model was correct without being situationally aware.

How the situation unfolded

The impact was limited to a single enterprise client, but the setup made the issue impossible to ignore. The AI-assisted module sat at the center of daily work evaluation and prioritization.

Within weeks of rollout, it became embedded in routine planning – less like a reporting dashboard, more like a pacing guide.

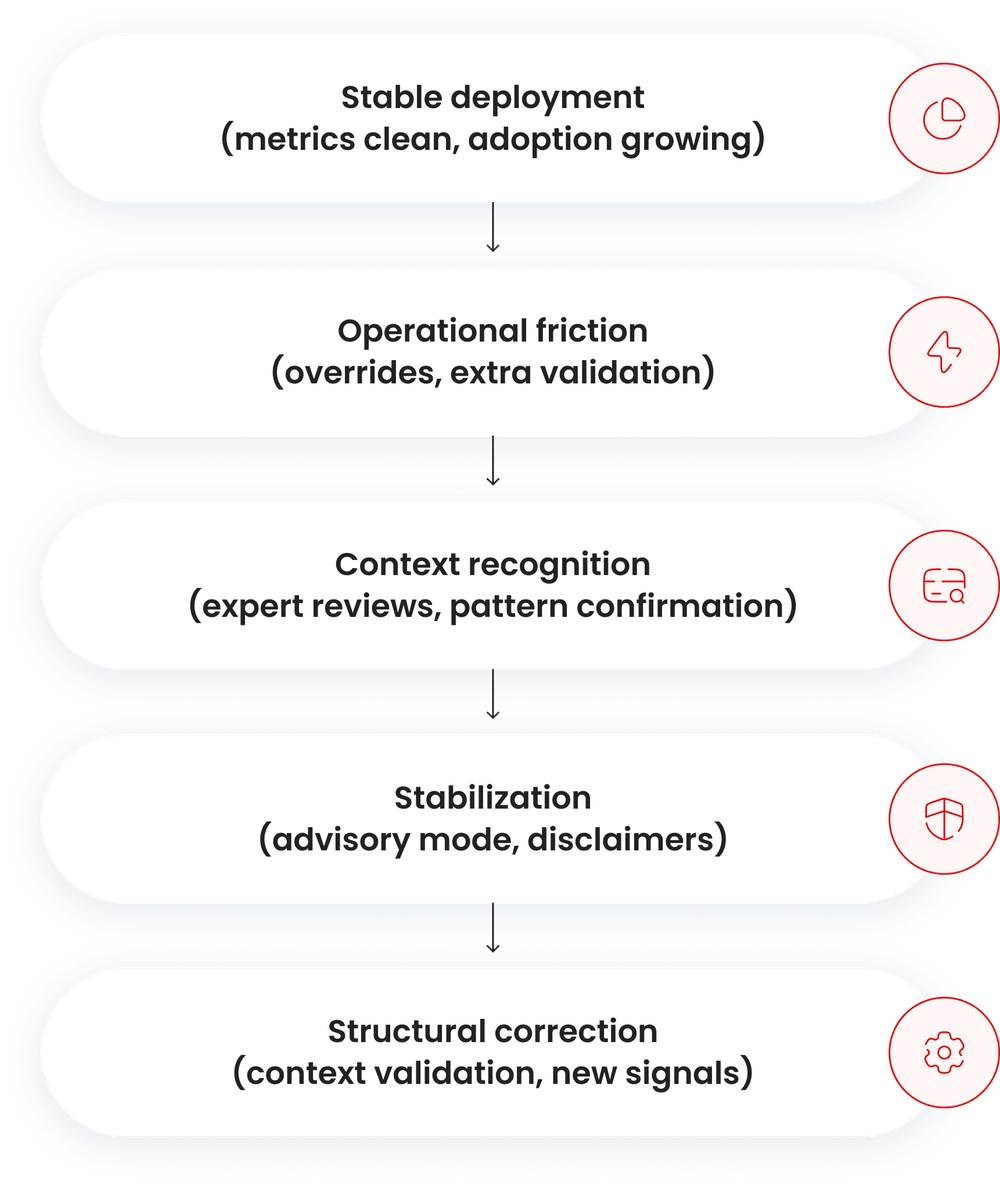

Technically, everything remained stable. Infrastructure performed as designed, reliability metrics held steady, and nothing failed. Yet within that stable system, a quiet friction began to build between the model’s recommendations and operational reality.

From a technical standpoint, everything looked correct:

- recommendations remained consistent

- predictions stayed statistically sound

- offline evaluation metrics showed no degradation

From an operational standpoint, something else was happening:

- users overrode recommendations in specific scenarios

- teams spent additional time validating decisions outside the system

- overrides concentrated around dynamic or constrained conditions
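A pattern like this only shows up when overrides are broken down by operating context instead of being averaged into a single number. The minimal Python sketch below shows that breakdown, assuming a hypothetical decision log in which each entry records the operating condition and whether the user overrode the recommendation. The `Decision` schema, the condition labels, and the sample entries are illustrative, not data or code from this case.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Decision:
    """One logged recommendation and what the user did with it (hypothetical schema)."""
    condition: str    # e.g. "stable", "rapid_change", "constrained"
    overridden: bool  # True if the user rejected or changed the recommendation


def override_rate_by_condition(decisions: list[Decision]) -> dict[str, float]:
    """Share of recommendations overridden, grouped by operating condition."""
    totals: dict[str, int] = defaultdict(int)
    overrides: dict[str, int] = defaultdict(int)
    for d in decisions:
        totals[d.condition] += 1
        if d.overridden:
            overrides[d.condition] += 1
    return {c: overrides[c] / totals[c] for c in totals}


if __name__ == "__main__":
    # Illustrative log entries only.
    log = [
        Decision("stable", False),
        Decision("stable", False),
        Decision("stable", True),
        Decision("stable", False),
        Decision("rapid_change", True),
        Decision("rapid_change", True),
        Decision("constrained", True),
        Decision("constrained", False),
    ]
    for condition, rate in sorted(override_rate_by_condition(log).items()):
        print(f"{condition}: {rate:.0%}")
```

An overall override rate would blend these groups together; splitting by condition is what reveals where the model’s assumptions stop holding.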

I didn’t interpret this as a loss of trust in the model. Users trusted the logic. They just recognized its limits.

Over time (after a few weeks of hands-on use), they learned when to slow down and double-check. As educators often do, they treated the system as a strong assistant, not as the final authority.

The net result was a split picture: the system met every SLA and reliability target, while perceived usefulness dipped in precisely the situations where support mattered most.

How the issue became clear

Automated monitoring never identified the issue. Metrics stayed within expected ranges. I only understood what was happening through structured feedback sessions and expert reviews with people who used the system daily.

These review sessions took place over multiple iterations across several weeks, allowing patterns to repeat and confirm themselves.

Years of working with teachers, instructional designers, and operations teams taught me to take this kind of feedback seriously, even when numbers look reassuring.

Different domain specialists described the same experience in different words. The logic made sense, but the assumptions didn’t always match how decisions unfolded in practice. That consistency across perspectives told me this wasn’t a usage problem or a gap in user training. It pointed to a limitation in how the system reasoned about context.

How the team closed the gap

Once I reframed the problem around decision context rather than prediction quality, the path forward became clearer. I stopped asking whether the model was accurate and started asking when its recommendations should apply.

We introduced several immediate measures to reduce friction while we worked on bigger changes:

- we shifted affected recommendations into an advisory-only mode

- we made assumptions explicit in the interface rather than implicit

- we ran joint review sessions with domain experts to align expectations

We designed and rolled out these interim measures during a stabilization phase of a few weeks, without disrupting operations.
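The first two interim measures can be pictured as a thin policy layer around the model’s output. The Python below is a sketch under stated assumptions: `Recommendation`, `Mode`, `ADVISORY_CONDITIONS`, and the condition labels are hypothetical names, not the platform’s actual interface. It downgrades recommendations to advisory-only in affected scenarios and carries each recommendation’s assumptions through to the text the user sees.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    DIRECTIVE = "directive"  # presented as the default action
    ADVISORY = "advisory"    # presented as a suggestion the user must confirm


@dataclass
class Recommendation:
    action: str
    # Assumptions travel with the recommendation so the UI can show them.
    assumptions: list[str] = field(default_factory=list)
    mode: Mode = Mode.DIRECTIVE


# Hypothetical set of scenarios treated as "affected" during stabilization.
ADVISORY_CONDITIONS = {"rapid_change", "constrained"}


def apply_interim_policy(rec: Recommendation, condition: str) -> Recommendation:
    """Return a copy downgraded to advisory-only when the condition is affected."""
    mode = Mode.ADVISORY if condition in ADVISORY_CONDITIONS else rec.mode
    return Recommendation(rec.action, list(rec.assumptions), mode)


def render(rec: Recommendation) -> str:
    """Build the text shown to the user, listing assumptions explicitly."""
    label = "Suggested" if rec.mode is Mode.ADVISORY else "Recommended"
    lines = [f"{label}: {rec.action}"]
    lines += [f"  assumes: {a}" for a in rec.assumptions]
    return "\n".join(lines)


if __name__ == "__main__":
    rec = Recommendation("Prioritize task A", ["staffing matches last quarter"])
    print(render(apply_interim_policy(rec, "rapid_change")))
```

Returning a copy rather than mutating the original keeps the model’s raw output intact, so the policy layer can be removed later without touching the model.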

At the same time, we addressed the root cause. The model had been trained on high-quality historical data generated under relatively stable conditions. Live decisions, however, depended on constraints and trade-offs that shifted from moment to moment.

Our evaluation process emphasized correctness but ignored whether recommendations succeeded once acted upon.
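To make that difference concrete, here is a minimal Python sketch of an evaluation that reports an outcome-based usefulness signal next to accuracy. The `Outcome` fields `followed` and `succeeded` are hypothetical; they assume the feedback loop logs whether a recommendation was acted on and whether the resulting decision worked out. Usefulness is defined here simply as the success rate among followed recommendations, which is one possible definition among several.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Outcome:
    predicted: str             # model output
    actual: str                # ground-truth label used for offline accuracy
    followed: bool             # did the user act on the recommendation?
    succeeded: Optional[bool]  # reported outcome after acting; None if not followed


def evaluate(records: list[Outcome]) -> dict[str, float]:
    """Report accuracy together with outcome-based signals."""
    if not records:
        raise ValueError("no records to evaluate")
    accuracy = sum(r.predicted == r.actual for r in records) / len(records)
    follow_rate = sum(r.followed for r in records) / len(records)
    # Usefulness: share of followed recommendations whose decision succeeded.
    acted = [r for r in records if r.followed and r.succeeded is not None]
    usefulness = sum(bool(r.succeeded) for r in acted) / len(acted) if acted else float("nan")
    return {"accuracy": accuracy, "usefulness": usefulness, "follow_rate": follow_rate}


if __name__ == "__main__":
    # Illustrative records: every prediction matches its label,
    # yet only half of the followed recommendations worked out.
    records = [
        Outcome("A", "A", followed=True, succeeded=True),
        Outcome("B", "B", followed=True, succeeded=False),
        Outcome("A", "A", followed=False, succeeded=None),
        Outcome("C", "C", followed=False, succeeded=None),
    ]
    print(evaluate(records))
    # {'accuracy': 1.0, 'usefulness': 0.5, 'follow_rate': 0.5}
```

In the illustrative data, accuracy alone would suggest nothing is wrong. Only the outcome-based numbers expose the gap.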

Addressing the root cause took sustained work across several development cycles rather than a single iteration.

The main structural adjustments were:

- added contextual validation checks before inference

- flagged recommendations when operating conditions fell outside learned bounds

- redesigned feedback loops to capture decision outcomes, not just agreement

- introduced a usefulness signal alongside accuracy in evaluation

- set a weekly review of context-mismatch signals and a quarterly expert evaluation of decision relevance
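The first two adjustments, a contextual check before inference and a flag for out-of-bounds conditions, can be sketched as a per-feature range check. This Python example assumes numeric features and uses the simplest possible notion of “learned bounds”: the minimum and maximum each feature took in the training data. The feature names, `Bounds`, and the `model` callable are hypothetical stand-ins. A real system might prefer richer drift or out-of-distribution tests.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Bounds:
    low: float
    high: float


def learn_bounds(training_rows: list[dict[str, float]]) -> dict[str, Bounds]:
    """Record the range each feature took in the historical training data."""
    if not training_rows:
        raise ValueError("need at least one training row")
    features = training_rows[0].keys()
    return {
        f: Bounds(min(r[f] for r in training_rows), max(r[f] for r in training_rows))
        for f in features
    }


def out_of_bounds(row: dict[str, float], bounds: dict[str, Bounds]) -> list[str]:
    """Return features that are missing or outside their learned range."""
    flagged = []
    for name, b in bounds.items():
        value = row.get(name)
        if value is None or not (b.low <= value <= b.high):
            flagged.append(name)
    return flagged


def recommend_with_context_check(
    row: dict[str, float],
    bounds: dict[str, Bounds],
    model: Callable[[dict[str, float]], str],
) -> dict:
    """Validate the context first, then run the model and attach the flag."""
    reasons = out_of_bounds(row, bounds)  # the check runs before inference
    return {
        "recommendation": model(row),
        "flagged": bool(reasons),  # the UI can present this as "outside usual conditions"
        "reasons": reasons,
    }


if __name__ == "__main__":
    # Hypothetical features and values, for illustration only.
    history = [
        {"queue_length": 10.0, "staff_available": 5.0},
        {"queue_length": 30.0, "staff_available": 8.0},
    ]
    bounds = learn_bounds(history)
    live = {"queue_length": 55.0, "staff_available": 6.0}
    print(recommend_with_context_check(live, bounds, lambda r: "prioritize backlog"))
    # {'recommendation': 'prioritize backlog', 'flagged': True, 'reasons': ['queue_length']}
```

Flagging rather than suppressing keeps the recommendation available while telling users when it rests on conditions the model has not seen. The collected `reasons` are also a natural input to a periodic review of context-mismatch signals.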

After deployment, overrides dropped significantly. Users relied on the system more confidently, even though raw accuracy metrics barely changed. In practice, we adjusted how the system behaved without changing its core.

What we took from this

High-quality data and strong accuracy metrics are necessary, but they don’t guarantee that a system supports real decisions. Accuracy can remain stable while usefulness erodes under changing conditions.

This case reinforced a few principles I treat as non-negotiable:

- correctness does not imply relevance

- context must be explicitly represented, not assumed

- feedback loops should measure outcomes, not compliance

- expert judgment remains essential, especially when metrics look “fine”

The system improved not because it became more sophisticated, but because it became clearer about where its reasoning applied – and where it didn’t.

We approach every case at Aristek Systems with the same level of care, looking beyond metrics to understand how systems behave in real decision contexts. If you have a gut feeling that something in your project needs a closer look, we’d be glad to talk it through.