By the end of 2025, the AI market in education is expected to reach a staggering $6 billion.

What does this tell us? Simply put, we need to keep learning how to work alongside AI – both teachers and learners alike.

However, AI also comes with risks. Cyber threats, data privacy concerns and the constant need to update digital skills due to rapidly changing technologies can make AI tools more difficult to use.

In this article, we explore three of the most pressing ethical issues surrounding the use of AI in education.

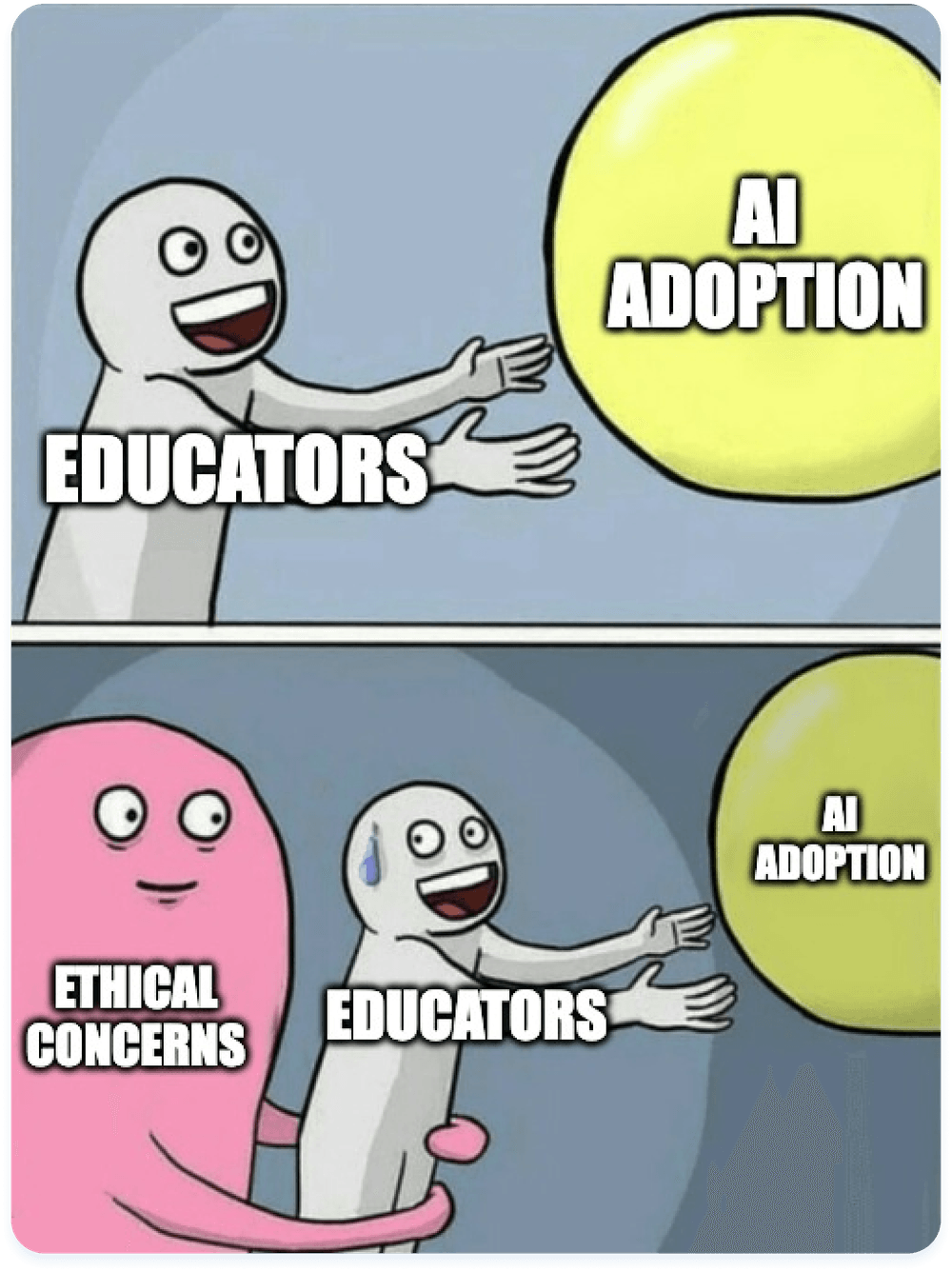

AI perception in education

Last year’s analysis by Springer Nature found that 84% of educators are open to students using AI, while 16% remain opposed. This highlights a growing acceptance of AI in education, as most teachers recognize its potential benefits.

However, the divide also reflects ongoing concerns about its impact on learning and ethical use. We have previously covered teachers’ general concerns, along with recent insights, in a dedicated article.

The Philologists’ Association of North Rhine-Westphalia is one of the first teachers’ associations to survey its members on AI in schools systematically. A survey conducted from January 5 to 21, 2024, gathered responses from 853 teachers across various school types.

AI usage has more than doubled within a year. Younger teachers (under 35) are the most open to AI, with 66% using it in their work – a significant jump from 29% in 2023.

Middle-aged teachers (35–55) show moderate adoption (48–51%), while those over 55 remain the most hesitant, with only 28% using AI and minimal growth in acceptance.

Although concerns about AI use have decreased, 52% of respondents still do not use AI in their work. The largest group of respondents (45%) see ChatGPT and similar tools as more of an added burden, while 18% find them a clear burden.

This aligns with the fact that many respondents simply don’t have the time or flexibility in their daily work to explore new technologies with an open mind.

Separately, North Rhine-Westphalia launched a two-year AI pilot in schools on February 1, 2025. With 25 schools involved, we’ll be watching how AI applications can support and improve teaching.

Despite all the advantages that AI and digitalization offer, many people in the education industry view these technologies with skepticism. Some even see them as a threat. What exactly scares them?

Concerns about AI in education

- Fear of job losses due to digitalization and AI

- Feeling overwhelmed by new technologies

- New possibilities for data misuse

- Fear of losing autonomy

- Concern that digital processes will make everyday work even more hectic

- Fear of losing control

- General concerns about change

Given these challenges, AI ethics provides a framework for ensuring the responsible development and use of AI in education.

The UNESCO recommendation defines AI ethics as a systematic, evolving framework of interdependent values, principles, and actions. It serves as a guiding foundation for evaluating and governing AI technologies – prioritizing human dignity, well-being, and harm prevention while being rooted in the ethics of science and technology.

Below, we’ll look at the top three ethical considerations around the use of AI in education.

1. Plagiarism and cheating

According to Microsoft’s study on AI in education, plagiarism and cheating are top concerns for teachers (42%) and educational authorities (24%).

Students themselves seem to be increasingly unsure about how they can use AI in an academic context without violating the rules. More than half of students (52%) worry about being wrongly accused of academic dishonesty.

Some universities are trying to protect themselves against attempts at fraud, sometimes with signed declarations of independent work and sometimes with plagiarism detection software. Since not all universities have developed detailed guidelines yet, students are advised to follow these general principles:

- AI tools must be cited like any other source. Student papers that lack proper attribution may be considered plagiarism or academic misconduct.

- AI-generated content is not a scholarly source but more like an internet search result. Even when cited, authors are responsible for verifying the accuracy and relevance of AI outputs.

- Assignments and exams must reflect students’ own efforts. While AI can assist with content outlines and structures, students must critically engage with these tools and take full responsibility for their work, including text, images, diagrams, and bibliographies.

During the learning process, students can keep a log of which tools they used for which sections of their work.
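One possible shape for such a log is sketched below. The field names (`section`, `tool`, `purpose`, `verified`) and the helper `unverified_sections` are illustrative assumptions, not a format prescribed by any university, and the entries are made up.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AIUsageEntry:
    """One row of a student's AI usage log (hypothetical format)."""
    day: date
    section: str    # part of the paper the tool was used for
    tool: str       # name of the AI tool
    purpose: str    # what the tool was asked to do
    verified: bool  # whether the student checked the output against sources


def unverified_sections(log: list[AIUsageEntry]) -> list[str]:
    """Return the sections whose AI output has not been checked yet."""
    return [entry.section for entry in log if not entry.verified]


# Made-up entries for illustration only.
log = [
    AIUsageEntry(date(2025, 3, 3), "Outline", "ChatGPT", "suggest a structure", True),
    AIUsageEntry(date(2025, 3, 5), "Literature review", "ChatGPT", "summarize two articles", False),
]

print(unverified_sections(log))
```

A log like this also makes attribution easier later, since each entry already names the tool and the section it touched.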

Overall, the key to ethically integrating AI in education is to ensure AI-generated content supports learning rather than replacing original student work.

Educators must set clear guidelines on responsible AI use, emphasizing transparency, proper attribution, and critical thinking to maintain academic integrity.

2. Data privacy and security

The term “privacy” is often discussed in the context of data protection, security, and safety – concerns that have gained increasing attention in education.

Regulations like the GDPR in Europe and the CCPA in the U.S. have set important standards for safeguarding personal data, including that of students and educators.

In the education sector, institutions and EdTech companies must rethink how they collect, store, and use personally identifiable information (PII) to ensure compliance and build trust. As AI-powered learning tools become more common, investing in data security is essential to prevent vulnerabilities, unauthorized access, and potential misuse of student information.
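As a rough illustration of reducing PII exposure before student data reaches an external AI tool, the sketch below replaces a student ID with a keyed hash and masks e-mail addresses in free text. The record layout, the `SECRET_KEY` value, and the function names are assumptions made for this example. Pseudonymization of this kind is only one building block and does not by itself establish GDPR or CCPA compliance.

```python
import hashlib
import hmac
import re

# Hypothetical secret; in practice it would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-real-secret"

# Simple e-mail pattern for illustration; it will not catch every valid address.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize_id(student_id: str, key: bytes = SECRET_KEY) -> str:
    """Replace a student ID with a keyed hash so records stay linkable but not directly identifying."""
    digest = hmac.new(key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def redact_emails(text: str) -> str:
    """Mask e-mail addresses in free text before it leaves the institution."""
    return EMAIL_PATTERN.sub("[email removed]", text)


# Made-up record for illustration only.
record = {"student_id": "S-1042", "essay": "Contact me at jane.doe@example.edu for the draft."}
safe_record = {
    "student_ref": pseudonymize_id(record["student_id"]),
    "essay": redact_emails(record["essay"]),
}
print(safe_record)
```

The keyed hash is deterministic, so the same student maps to the same reference across submissions, while the original ID cannot be read back without the key.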

3. Bias and fairness

AI makes mistakes as part of its learning process, which involves recognizing patterns. Like humans, machines sort, classify, and generalize the objects they learn.

However, machines do not create new realities; all AI decisions are based on real data. Since biases and prejudices exist in the real world, they are also reflected in the datasets we provide to AI. What machines lack, compared to humans, is the ability to think critically about their decisions. Machines are not moral beings and, at least for now, lack self-awareness.

Incidents of bias and discrimination in a number of intelligent systems have raised many ethical questions regarding the use of artificial intelligence. A few notorious cases include:

- AI text detection bias (global, 2024). Studies reveal that GPT detectors disproportionately misclassify non-native English writing as AI-generated due to lower linguistic complexity. Research on TOEFL essays found a 61.3% false-positive rate, unfairly penalizing non-native speakers. Enhancing vocabulary reduced misclassification, while simplifying native writing increased it, exposing inherent bias in detection methods.

- AI image generation bias (Google, 2024). Google’s Gemini chatbot faced backlash for generating historically inaccurate images, including people of color as Nazi soldiers, due to an overcorrection in diversity representation. The controversy led Google to pause Gemini’s generation of images of people, with executives admitting to flawed implementation.

- Standardized testing bias (UK, 2020). In response to the COVID-19 pandemic, the UK government used a statistical algorithm to predict student grades for A-level exams when in-person testing was canceled. The algorithm disproportionately downgraded students from disadvantaged schools. Public backlash led to the system being scrapped, and students were instead awarded their teacher-assessed grades.

- AI-powered admissions systems fairness issues (USA, various years). Some universities and schools have experimented with AI-based admissions screening tools to assess applicants. However, practice has shown that these systems can inherit biases from historical admissions data, favoring applicants from privileged backgrounds while disadvantaging students from underrepresented groups. In some cases, AI has disproportionately recommended rejection for applicants from lower-income neighborhoods or specific ethnic backgrounds.

Detecting and addressing bias in AI in education requires robust AI governance – tailored policies, practices, and frameworks that guide the responsible use of technology in schools.

By monitoring AI systems closely, educational institutions can identify and mitigate biases in algorithms, ensuring that AI tools promote fairness, equity, and inclusivity for all students.
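One simple form of such monitoring is to compare error rates across groups, as in the AI text detection case above. The sketch below computes a per-group false-positive rate, meaning the share of human-written texts that a detector flags as AI-generated. The record layout and the group labels are assumptions, and the sample is invented for illustration rather than taken from any study.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Compute, per group, the share of human-written texts flagged as AI-generated.

    Each record is a tuple: (group, is_ai_written, flagged_as_ai).
    """
    flagged = defaultdict(int)
    human_total = defaultdict(int)
    for group, is_ai_written, flagged_as_ai in records:
        if not is_ai_written:  # only human-written texts can be false positives
            human_total[group] += 1
            if flagged_as_ai:
                flagged[group] += 1
    return {group: flagged[group] / human_total[group] for group in human_total}


# Made-up audit sample, not real study data.
sample = [
    ("native", False, False),
    ("native", False, False),
    ("native", False, True),
    ("native", False, False),
    ("non_native", False, True),
    ("non_native", False, True),
    ("non_native", False, False),
    ("non_native", False, True),
    ("non_native", True, True),  # AI-written text: ignored for the false-positive rate
]

rates = false_positive_rates(sample)
print(rates)
```

A large gap between groups, as in this invented sample, is a signal to review the tool before its output is used in decisions about students.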

Here are three key tips from Aristek’s software development team on how to prevent biases in AI tools:

1. Select the right learning model

For supervised models, involve a diverse stakeholder team – beyond just data scientists – trained to prevent unconscious bias. For unsupervised models, embed bias prevention tools in the neural network so it can learn to identify biases.

2. Assemble a diverse, well-trained team

Ensure that the team responsible for selecting training data includes experts from various disciplines. This diversity helps counteract unconscious bias and ensures a balanced approach.

3. Train on complete, balanced data

Use high-quality datasets that accurately reflect the true demographics of the target group. This is essential for producing reliable and fair outcomes.
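A quick way to check the third tip is to compare each group’s share in the training data with its share in the target population. The following is a minimal sketch under stated assumptions: the function name `representation_gaps`, the 5% tolerance, and the group labels and shares are all hypothetical.

```python
from collections import Counter


def representation_gaps(samples, target_shares, tolerance=0.05):
    """Return groups whose dataset share differs from the target share by more than `tolerance`.

    `samples` is a non-empty list of group labels, one per training record.
    The returned value per group is (dataset share - target share), rounded to 3 decimals.
    """
    counts = Counter(samples)
    total = len(samples)
    gaps = {}
    for group, target in target_shares.items():
        actual = counts.get(group, 0) / total
        if abs(actual - target) > tolerance:
            gaps[group] = round(actual - target, 3)
    return gaps


# Made-up example: group label of each training record and assumed population shares.
training_groups = ["urban"] * 80 + ["rural"] * 20
target_population = {"urban": 0.6, "rural": 0.4}

print(representation_gaps(training_groups, target_population))
```

A positive gap means the group is overrepresented in the data and a negative gap means it is underrepresented, which suggests where more data collection or reweighting may be needed.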

It’s also important to know what generative AI can and can’t do – and we’ve prepared great material to help you figure that out!

Best practices for effective AI compliance

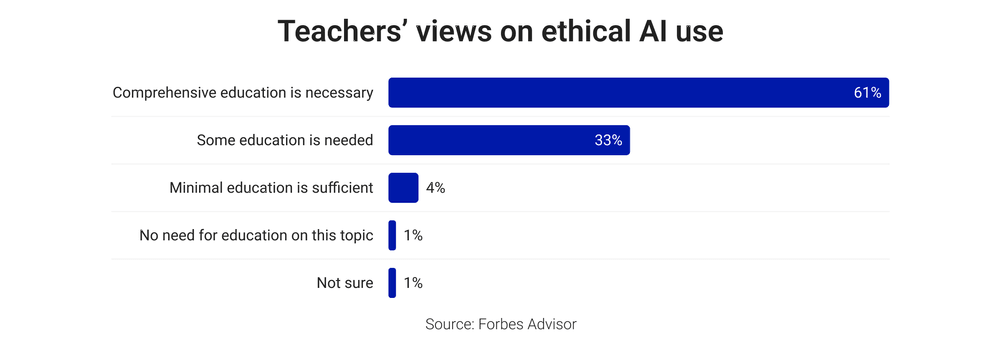

According to a Forbes survey, 98% of respondents believe students need at least some training on the ethical use of AI, with over 60% supporting comprehensive training.

Educators don’t need to be AI experts to use these systems effectively, but they should be able to ask key questions and engage in meaningful discussions with AI providers and education authorities. Understanding the ethical and practical implications of AI in schools helps ensure its responsible use.

The following guiding questions address both implementation and ethical concerns, helping teachers reflect on their professional practice:

- Have educators and content providers been properly trained and provided with all the necessary information to use the AI tool effectively, ensuring it is safe and does not allow for PII leakage?

- Do teachers and school administrators understand the AI methods and functions that underpin the system in terms of knowledge assessment?

- Does the AI tool support adaptive learning and accommodate students with disabilities or special educational needs?

Team Aristek has a lot to add to that list. To ensure the ethical application of AI in education, three key principles should be adhered to:

1. Human-centered approach

AI should support, not replace, human decision-making. It must respect human identity, dignity, and integrity, ensuring that students and teachers are treated as individuals, not just data points.

A reliable technical vendor that upholds these values can be a great starting point. Follow our guide to choosing the right AI partner.

2. Fairness and inclusion

AI systems must provide equal opportunities, avoid discrimination, and ensure all users – regardless of background – have fair access to educational resources.

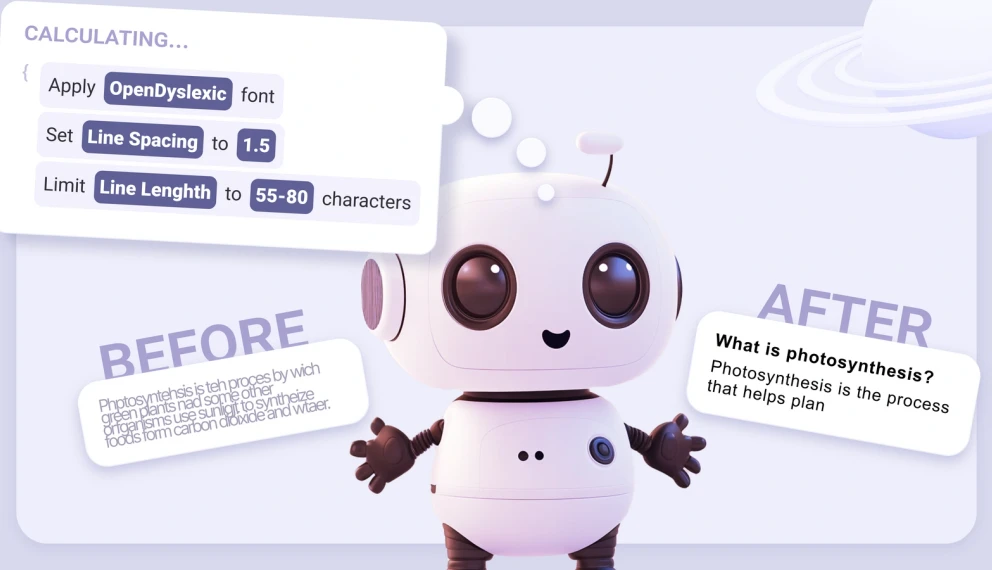

Case study: Content adaptation for dyslexic learners

Aristek’s team developed an AI solution to support learners with reading and writing disorders. This case study highlights how AI not only eases the workload for content creators but also lowers the costs of processing and updating educational materials, making learning more accessible and inclusive.

3. Transparency and accountability

AI-driven decisions should be explainable, based on clear procedures, and involve collaborative input from educators and stakeholders to ensure trust and reliability.

We suggest educators, EdTechs, policymakers, and regulators adhere to five core principles to ensure the responsible use of AI in education:

- Collaboration: Foster multi-level cooperation to adapt education to the digital age, ensuring effective integration of AI in teaching and learning.

- Openness to opportunities: Leverage AI to enhance the quality and accessibility of digital education, support innovative teaching methods, and improve infrastructure for inclusive and efficient distance learning.

- Data protection: Safeguard user privacy by ensuring AI systems comply with data protection regulations and provide clear assurances about how personal data is used and secured.

- Transparency: Ensure AI systems are explainable by clearly stating who trains them, what data is used, and how algorithmic recommendations are made.

- Security: Design AI systems to be robust against potential threats, minimize security risks, and enhance trust in AI-driven outcomes.

With over 22 years of experience in custom development, we know how to implement AI in education according to transparency, cybersecurity, and ethical principles.

Conclusion

Ethical AI use in education is an ongoing responsibility that must evolve alongside technology and regulations – gradually and thoughtfully, as sudden, drastic changes can be overwhelming.

Institutions that embrace ethical AI practices can enhance teaching, promote trust, and create a more responsible and inclusive educational environment.

In parallel, an extremely important aspect for EdTech companies is the sovereignty of AI applications. These should be operated in a highly secure environment, compliant with data protection regulations, and designed to prioritize transparency, fairness, and user control.