What would you choose when applying for a job: interacting with AI or a human recruiter?

Given the choice, 78% of job seekers prefer recruiters who use AI. AI-powered interviews often uncover more useful insights than human-led ones, and recruiters even rate these results higher.

Yet when it comes to final hiring decisions, humans still lean on standardized tests and traditional interviews.

So, can AI truly compete with human recruiters, and how can we strike a balance between candidate satisfaction and business outcomes?

In this article, we’ll explore how AI can empower HR professionals, where caution is needed, and how to introduce AI technologies into the hiring workflow seamlessly.

The role of AI in HR

Finding the right candidates can be time-consuming, and a slow hiring process can drive top talent away – a major challenge in a competitive job market.

Sixty percent of employers expect broadening digital access to transform their business by 2030, and 86% expect the same from advancements in AI and information processing.

In fact, AI is viewed as so valuable that 94% of employees would accept AI monitoring of business activities if it boosted productivity, and 46% would consider leaving a job that didn’t support AI tools in favor of a company that did.

In HR, AI technologies generally refer to the following types:

For a closer look at what generative AI can and cannot do, see the dedicated material.

By using these technologies (both as standalone tools and as modules integrated into HR and business systems, such as HRIS, CRMs, or talent management platforms), HR executives can:

- accelerate HR processes and free up resources in recruitment, onboarding, and performance reviews

- automate routine tasks to boost efficiency and productivity

- reduce unconscious bias in job ads, evaluations, and compensation analyses

- enhance employee and candidate experience through personalized interactions

- support faster, smarter decision-making with AI-powered insights and data analysis

- analyze workforce trends to improve retention and predict turnover risks

The targeted use of AI in recruiting is not just efficient but also a strategic advantage for HR professionals.

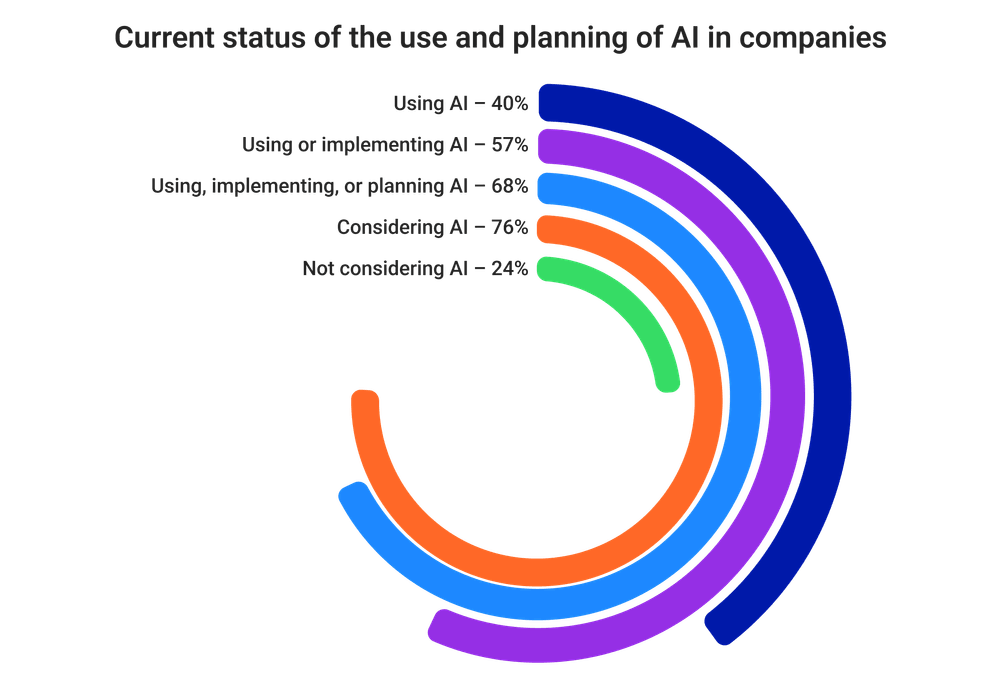

Today, 40% of companies already use AI in their HR practices, and nearly six in ten either use it or are in the process of implementing it. Including those planning adoption, the share rises to over two-thirds, which shows how much potential companies see in AI.

When company divisions plan AI adoption, most expect to implement it within two years, especially in HR, finance, and marketing. In a previous article, we explored several use cases across different industries.

The legal basis for AI in HR

In May 2024, the Council of the European Union gave final approval to the AI Act, a landmark law that establishes clear obligations for both providers and users (called “deployers” in the Act) of AI systems. The Act uses a risk-based framework, classifying AI systems into minimal, limited, high, and unacceptable risk categories.

High-risk AI (such as tools for recruitment, performance evaluation, or task assignment) must comply with strict requirements. The Act entered into force on August 1, 2024, and applies in stages: the ban on unacceptable-risk systems applies from February 2, 2025, while most remaining obligations, including those for high-risk systems, phase in over the following years.

The AI Act complements other key regulations:

- GDPR (General Data Protection Regulation) remains central, requiring lawful, transparent data processing, data minimization, and privacy protections. It also gives employees and applicants the right to human intervention in, and meaningful information about, decisions based solely on automated processing, which is critical for HR applications.

- Liability rules are evolving too. The revised Product Liability Directive, adopted in 2024, explicitly covers software, including AI systems, clarifying responsibility when defective products cause harm; the separately proposed AI Liability Directive, however, was slated for withdrawal in the European Commission’s 2025 work programme.

Globally, other developments are shaping AI governance:

- In the U.S., there is no single federal AI law yet, but several initiatives are emerging:

  - The proposed federal “No Robot Bosses Act” would require human oversight of automated hiring decisions.

  - Local and state laws are appearing as well: New York City’s Local Law 144, for example, requires independent bias audits for automated employment decision tools.

  - Several states, including Illinois, Virginia, and Utah, are introducing regulations on impact assessments, transparency, and accountability.

- At the international level, the Council of Europe’s Framework Convention on Artificial Intelligence, opened for signature in September 2024, seeks to guide AI development in line with human rights, democratic principles, and the rule of law, and is open for global accession.
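What does a bias audit like the one under Local Law 144 actually compute? Its central metric is the impact ratio: the selection rate of each demographic category divided by the selection rate of the most-selected category. The sketch below shows only that arithmetic. The category names and counts are hypothetical, and the 0.8 flag is the EEOC’s “four-fifths” rule of thumb, added here as an assumption for illustration, since Local Law 144 itself does not set a pass/fail threshold.

```python
from dataclasses import dataclass


@dataclass
class CategoryStats:
    applicants: int  # candidates in this category assessed by the tool
    selected: int    # candidates in this category the tool advanced


def impact_ratios(stats: dict[str, CategoryStats]) -> dict[str, float]:
    """Selection rate of each category divided by the highest selection rate."""
    rates = {
        name: s.selected / s.applicants
        for name, s in stats.items()
        if s.applicants > 0  # skip empty categories to avoid division by zero
    }
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any category")
    return {name: rate / best for name, rate in rates.items()}


if __name__ == "__main__":
    # Hypothetical audit data, for illustration only.
    data = {
        "category_a": CategoryStats(applicants=200, selected=60),
        "category_b": CategoryStats(applicants=150, selected=30),
    }
    for name, ratio in impact_ratios(data).items():
        # 0.8 = EEOC "four-fifths" rule of thumb (assumption, not set by Local Law 144)
        flag = "review" if ratio < 0.8 else "ok"
        print(f"{name}: impact ratio {ratio:.2f} ({flag})")
```

With these numbers, category_b’s selection rate (20%) is two-thirds of category_a’s (30%), so its impact ratio of 0.67 would be flagged for a closer look.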

Together, these regulations create a complex but structured legal environment for HR professionals using AI, emphasizing transparency, fairness, and accountability at every stage.

How AI eases the HR workload in practice

Even within this comprehensive legal framework, HR teams still face a heavy workload. Let’s look at where AI makes a real difference for HR executives in practice.

Automation

One of AI’s most obvious benefits is automating repetitive tasks. For example, AI-powered chatbots can handle FAQs from employees, freeing HR managers to focus on more complex issues that require their attention.

Key applications:

- Chatbots for 24/7 employee support

- Automatic delivery of HR documents, policies, and workflows

- Streamlined approval processes and routine HR notifications

In practice, teams already see AI cutting down the “back-and-forth” interruptions that used to eat up hours of their day.

Recruiting, screening & onboarding

AI doesn’t just sort resumes: it guides recruiters on where to spend their time, identifies top candidates, and streamlines pre-selection using people analytics. This can cut early-stage screening time in half and speed up the overall hiring cycle.

Beyond hiring, AI smooths the first days for new employees by automating onboarding materials and offering real-time answers via chatbots, reducing the burden on HR teams.

Key applications:

- Resume screening and ranking with people analytics

- Programmatic job ad placement in the right channels

- Automated pre-selection and candidate shortlisting

- AI-powered onboarding portals with tailored materials

Analyzing employee data

AI can flag issues before they snowball. By analyzing engagement data, it can reveal patterns hinting at low morale or potential turnover.

Proactive HR teams use these insights to address concerns before they affect performance or retention.

Key applications:

- Engagement data analysis

- Turnover risk prediction models

- Early warning systems for low engagement

- Personalized recommendations for retention
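An early warning system does not have to start as a machine-learning model. The sketch below is a simple rule-based stand-in, assuming pulse-survey scores on a 1-to-5 scale: it flags people whose latest score fell well below their own earlier average. The function name, the data layout, and the 0.5-point threshold are all hypothetical choices; a real system would need validated thresholds and a human follow-up rather than automatic action.

```python
from statistics import mean


def engagement_alerts(scores: dict[str, list[float]], drop_threshold: float = 0.5) -> list[str]:
    """Flag IDs whose latest score fell at least `drop_threshold` below their earlier average.

    `scores` maps a pseudonymous employee ID to pulse-survey scores, oldest first.
    The threshold is a hypothetical default, not a validated cut-off.
    """
    flagged = []
    for person, history in scores.items():
        if len(history) < 3:
            continue  # too little history to establish a personal baseline
        baseline = mean(history[:-1])  # average of all scores except the latest
        if baseline - history[-1] >= drop_threshold:
            flagged.append(person)
    return flagged


if __name__ == "__main__":
    # Hypothetical survey history, for illustration only.
    surveys = {
        "emp_001": [4.2, 4.1, 4.3, 3.4],  # sharp drop against a stable baseline
        "emp_002": [3.9, 4.0, 3.8, 3.9],  # stable
        "emp_003": [4.5, 2.0],            # not enough history yet
    }
    print(engagement_alerts(surveys))
```

Comparing each person with their own baseline, rather than with a company-wide average, avoids flagging people who simply rate surveys more conservatively than their colleagues.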

Building inclusive teams

AI can support diversity and inclusion efforts. When carefully designed and regularly audited, AI-supported applicant selection can help evaluate every candidate against the same criteria, reducing the risk of unintentional favoritism.

It can also assist applicants with writing or speech difficulties by offering accessible formats and adaptive communication tools. Experienced HR managers often point out that while AI isn’t a magic bullet, it can remove many of the unconscious biases that human teams inadvertently carry.

Key applications:

- Bias detection and correction in job ads and evaluations

- Accessible screening support for candidates with special needs

- Diversity analytics dashboards

- Monitoring fairness in promotion and compensation

Workforce planning

AI can predict employee turnover or staffing peaks based on trends and global developments, giving companies a heads-up to hire, redeploy, or scale down. This kind of forward-looking insight allows HR teams to plan with far more confidence than guessing from last year’s numbers.

Key applications:

- Turnover and retention forecasting

- Scenario modeling for staffing needs

- Predictive analytics for succession planning

- Alignment of workforce strategy with business goals

It is important to remember that AI in HR processes highly sensitive personal data. The EU AI Act classifies many such applications as high-risk, so strict compliance is mandatory.

Experienced teams know that ignoring these regulations can lead to both legal headaches and serious reputational damage. Let’s look at AI’s limitations in more detail.

The limits of AI in hiring

One thing about AI’s pitfalls is certain: AI follows its own logic and cannot yet read the subtleties of human behavior. Every interaction carries layers beyond the factual content, and AI can easily miss those nuances, leading to inaccurate predictions or missteps.

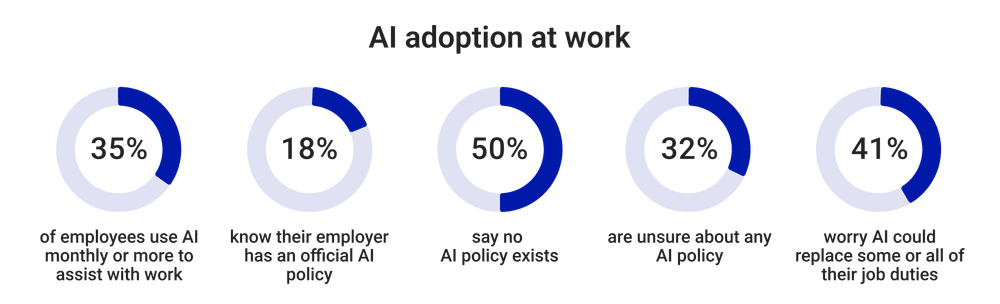

In addition, many employees use AI on their own, often without clear rules from their employers. According to the APA’s 2024 Work in America survey, 41% of U.S. workers worry that AI could eventually replace part or all of their jobs. These fears are not unfounded: structural shifts in the labor market, with AI among the main drivers, could displace up to 92 million jobs by 2030.

At the same time, 22% of business representatives admit they use AI only to a small extent. Respondents cite several reasons why AI is not yet used or considered relevant:

- Organizational barriers: Lack of time to explore AI tools and insufficiently digitalized processes.

- Acceptance issues: AI is seen as adding little value, not widely accepted within the company, or restricted by data protection concerns.

- Knowledge and skills gaps: Unclear use cases or a lack of employee expertise to work with AI.

- Position-related concerns: Managers in particular point to limited time to engage with AI tools and insufficient skills among staff.

Meanwhile, most workplaces still haven’t established clear policies to guide AI use.

Against this backdrop, it’s no surprise that practitioners keep running into very specific limitations of AI in their daily work. Here are the main ones HR teams have observed:

Limited datasets

AI is only as smart as the data it learns from. If the training data is biased (what some call “dirty data”), the AI will simply reinforce those biases. Experienced HR teams warn, “garbage in, garbage out,” especially when it comes to hiring predictions.

Human touch matters

Chatbots can technically conduct interviews, but they struggle to grasp human context. During skilled labor shortages, relying solely on AI is risky – applicants want to feel the workplace vibe, connect with future colleagues, and experience the nuances of human interaction. Some things AI simply cannot understand.

The black box problem

Most employees barely understand how AI reaches its conclusions. When decisions feel opaque, teams often underutilize these tools and skip feeding them additional data, which hinders the AI’s learning.

Limits to monitoring and learning

In theory, AI could analyze facial expressions, gestures, and speech to assess traits like teamwork or resilience. In practice, employees don’t want to be constantly watched, and the EU AI Act now prohibits emotion recognition systems in the workplace, except for medical or safety reasons.

Skepticism and adoption

Rolling out AI is less about the tech itself and more about how people accept and use it. Employees who don’t understand the tools (or don’t trust them at all) will avoid using them, making the investment ineffective. Clear communication, training, and a step-by-step approach to adoption help build confidence and ensure AI supports daily work.

Data protection concerns

AI in HR processes handles highly sensitive employee and applicant data. Even with consent agreements in place, the sheer volume and sensitivity of the information mean companies must take strong precautions. Robust security measures like encryption, pseudonymization, and strict access controls are essential to avoid both legal risks and reputational damage.
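One common pseudonymization technique is to replace direct identifiers with keyed hashes before records reach an analytics or screening tool. A minimal sketch using Python’s standard `hmac` module, assuming a secret key that is stored separately from the data; the field names are hypothetical. Keep in mind that under the GDPR, pseudonymized data is still personal data, because whoever holds the key can re-link the records.

```python
import hashlib
import hmac

# Hypothetical set of direct identifiers to replace; adapt to your own schema.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize(record: dict[str, str], key: bytes) -> dict[str, str]:
    """Replace direct identifiers with HMAC-SHA256 tokens; keep other fields unchanged."""
    out = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            # Same key + same value -> same token, so records can still be joined.
            out[field] = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
        else:
            out[field] = value
    return out


if __name__ == "__main__":
    # In practice, load the key from a secrets manager; never hard-code it.
    key = b"example-secret-key"
    applicant = {"name": "Jane Doe", "email": "jane@example.com", "role": "Data Analyst"}
    print(pseudonymize(applicant, key))
```

A keyed hash is used instead of a plain hash because common values like names and email addresses could otherwise be re-identified simply by hashing candidate guesses.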

Interpretation gaps

AI can screen resumes and highlight strong candidates, but it will only find what it is trained to look for. Subtleties that HR professionals value, like experience in another industry or time spent abroad, may be overlooked. That’s why careful model training and ongoing human supervision are essential.
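To make this concrete, here is a deliberately naive keyword screener. It is a toy illustration, not a description of how any particular product works, and the keywords and resume snippets are hypothetical. The second candidate has relevant supply chain experience from another industry plus time abroad, yet scores zero because none of the exact keywords appear.

```python
import re


def keyword_score(resume: str, keywords: set[str]) -> float:
    """Fraction of required keywords that appear as whole words in the resume text."""
    if not keywords:
        return 0.0
    words = set(re.findall(r"[a-z]+", resume.lower()))
    return len(keywords & words) / len(keywords)


if __name__ == "__main__":
    required = {"logistics", "sap", "forecasting"}  # hypothetical job requirements
    resumes = {
        "candidate_1": "Logistics coordinator, SAP power user, demand forecasting.",
        # Relevant experience in other words: another industry, two years abroad.
        "candidate_2": "Ran supply chain planning for a hospital network; two years in Singapore.",
    }
    for name, text in resumes.items():
        print(name, keyword_score(text, required))
```

Production tools typically use richer text representations than exact word matches, but the underlying limitation is similar: a model rewards what its training data and configuration tell it to reward.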

Striking the balance: AI + human collaboration

AI can speed up the hiring process, but it can’t replace human intuition. The real value comes when both work together, where each covers the other’s blind spots.

1. AI for screening and data-driven insights

Before you even open the first resume, get a clear picture of your needs, goals, and where your bottlenecks are. Teams that dive in without a plan quickly hit walls – AI can spit out patterns, but it won’t know which metrics actually matter for your hiring.

Start small with pilot projects: let the system sort candidates, highlight trends, and point out gaps, then see how those insights match reality. As one HR lead put it, “AI flagged 30 resumes we would’ve skipped, but half turned out to be gold.”

2. Humans for cultural fit and final decision-making

AI can do a lot, but it doesn’t pick up on the subtle stuff – attitude, team chemistry, adaptability. Experienced recruiters swear by keeping humans in the loop for interviews and final decisions. Consulting with other companies or external experts can help sharpen these judgment calls.

3. Hybrid models that improve both satisfaction and performance

Continuous evaluation isn’t just a checkbox; it’s how you avoid the “AI in theory, chaos in practice” trap. The sweet spot is a hybrid approach: let AI handle the heavy lifting (data, patterns, early screening) while humans focus on fit and context. Scale gradually, measure results, and tweak the system as you go.

Conclusion

When used thoughtfully, AI can handle repetitive tasks, spot patterns in candidate data, and surface insights that would take humans hours to uncover. The key is letting the technology do what it does best, while HR teams focus on judgment calls, cultural fit, and meaningful human interaction.

Even when AI is just a supporting tool, applicants need to know it’s in play. Teams that nail this balance – efficiency without losing the human touch – see faster hiring cycles and better retention. For those curious about how to integrate AI responsibly into HR, there’s a clear path forward.