Learn the difference between a data warehouse vs data lake. Everything you need to know in one article.

TLDR: data warehouse vs data lake

If you only have 30 seconds, just read the TLDR. But the devil is in the details, so if you have a few minutes, keep on reading – it’s worth it.

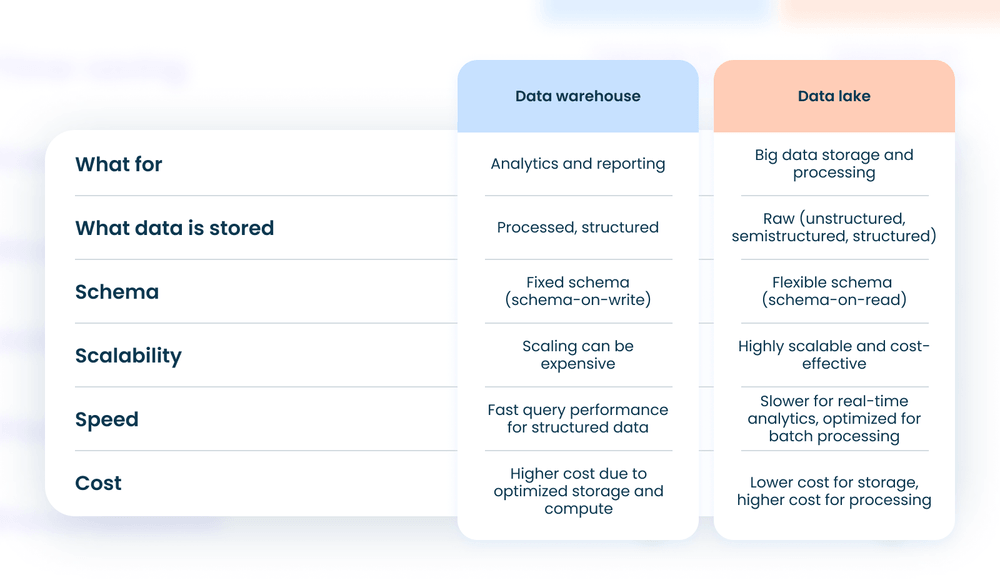

Data warehouse stores processed data that’s ready for analytics. Before data is moved to the warehouse, it gets structured and cleaned. That’s why even non-technical users can analyze data from a warehouse. For example, management can create charts with BI tools.

Data lake is a storage for raw data. You can move any files there and process them later. The idea is that you never know what data will be useful for analytics in 5 years. And because storage today is cheaper than processing, you can collect a ton of data first and maybe process it later. But data still needs to be processed before it’s analyzed. So data lakes can deliver more insights, but they are harder to work with.

Data lakehouse offers the best of both worlds. Technologically, a lakehouse is similar to a data lake but has a predefined schema and stores more metadata.

Get free data engineering advice

Do you need a data lake or data warehouse? We will analyze your workflow and help you make up your mind.

What’s common between data lakes & warehouses

Let’s get some context first. Why do we even need data warehouses and data lakes when there are regular databases?

A typical database is made for OLTP (online transactional processing). Meanwhile, both data warehouses and data lakes are great for OLAP (analytical processing).

Here’s what it means, in general:

Data integration

A database is much smaller. Think gigabytes, compared to terabytes or petabytes in lakes and warehouses. That is because databases store compact transactional data.

Meanwhile, data warehouses can merge data from many different databases. Data lakes go even further and can store all sorts of unstructured data: JSON files, text files, and even images or videos.

Historical information

In a database, there’s often no track of it because logs don’t keep everything. Databases can track historical data, but this would slow down the transaction process. That’s why databases mostly just keep current info.

If your client changes their address, the database will update to the new address, but the old one will be lost. Without historical info, you won’t see the trends.

Data warehouses and lakes solve this problem because they capture changes in data.

Query complexity

Both data lakes and warehouses are great at complex queries, while databases are made for simple queries.

Databases are pretty bad at complex queries. They rely on normalized schemas, so data is kept in tables. This makes databases very fast at simple queries, like retrieving an order by its ID. But when it comes to complex queries, there are too many joins from different tables – so complex queries become too slow.

Meanwhile, data warehouses and data lakes are great at them because they use denormalized schemas. There are fact tables that hold the measurable data, and dimension tables that contain descriptive attributes. This way, there are much fewer joins.

So if you want to analyze sales trends, you’ll need a data lake or a warehouse. But if you need to keep track of order statuses or process payments, databases are more efficient.

When to get a data warehouse

Simply put, a data warehouse is a giant database that’s optimized for analytics. It aggregates structured data from multiple sources and it’s great for deep analytical reports.

Companies have many different sources of data: databases, API connections with third-party tools or simply CSV files. A data warehouse aggregates all of your operational data in a single place. This way you can automatically create complex reports. You can check how your website redesign affected traffic and sales – with no need to manually pull data from Salesforce or Google Analytics.

Data warehouses are built so that low-technical users can create no-code reports. Data is moved from the sources into a single warehouse, where it can be analyzed with a BI tool.

How do data warehouses work?

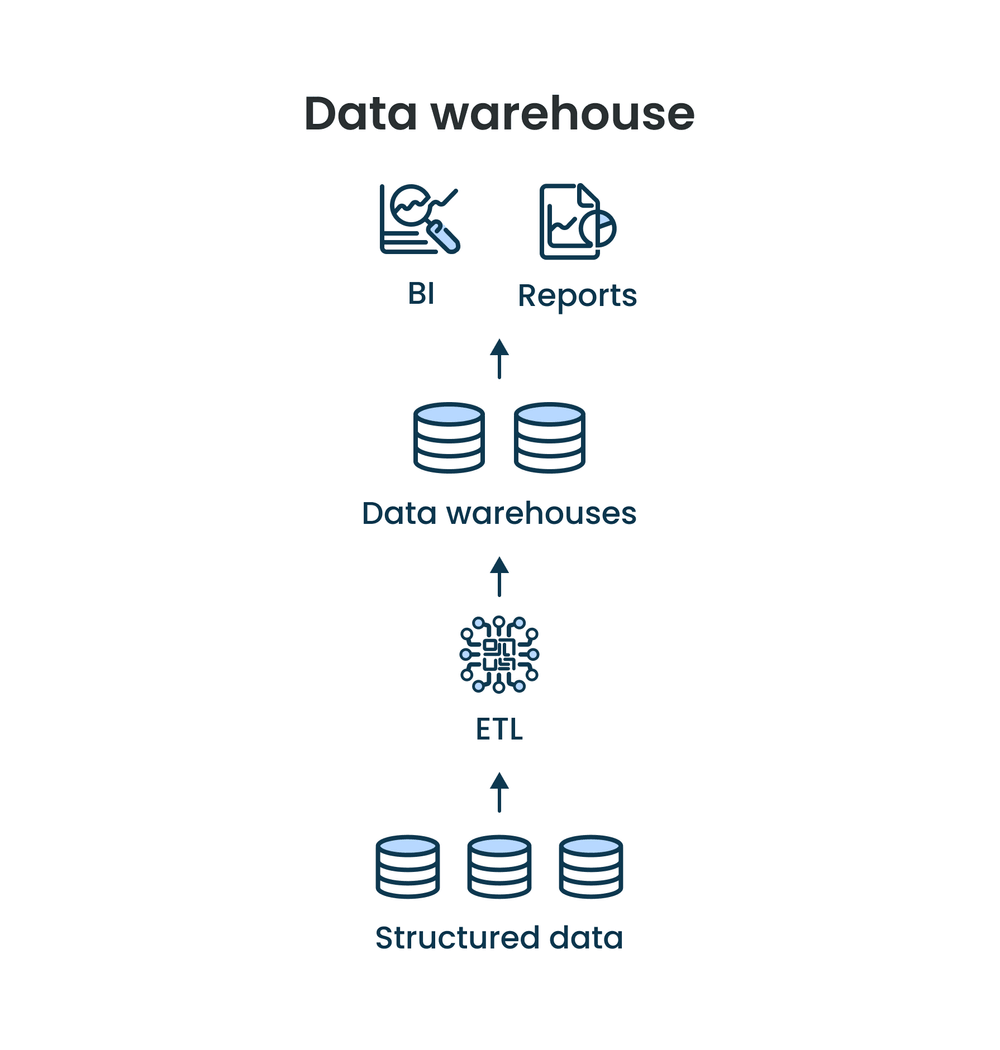

Most data warehouses go through the ETL process: extract, transform, load. Here’s how it works:

- Extract. Raw data gets extracted to a staging area either through direct querying or via API.

- Transform. The data gets cleansed from duplicates and errors. It also gets filtered, sorted and applied with the business rules.

- Load. Finally, data is loaded to the warehouse for storage and analysis. Here, it’s organized into tables or data structures for easy access and querying.

Relevant content: How to put AI to use in business in 7 essential steps

Data warehouses have predefined schemas. It means that data engineers had to think in advance about how data is stored at a warehouse. It also means that there are limits on the types of data that get analyzed. To store unstructured raw data, you need a data lake.

Real-life example

We built a data lake on our AI chatbot project. It was the perfect fit with the LLM-model.

A data lake is much easier to start with because it has an undefined schema. That’s called schema-on-read because it’s only applied during querying.

But when you process data from a lake, you still need to put it in a data warehouse. That’s right, when you have a data lake, in most cases you also need a data warehouse.

The problem with data lakes is that they can turn into data swamps. It’s tempting for companies to collect every bit of data they can. But it turns out, that’s not always a good idea. Data storage can get too expensive, and data processing becomes too difficult. There’s a joke that says, data lakes are named after Lake Karachay – a nuclear waste dump.

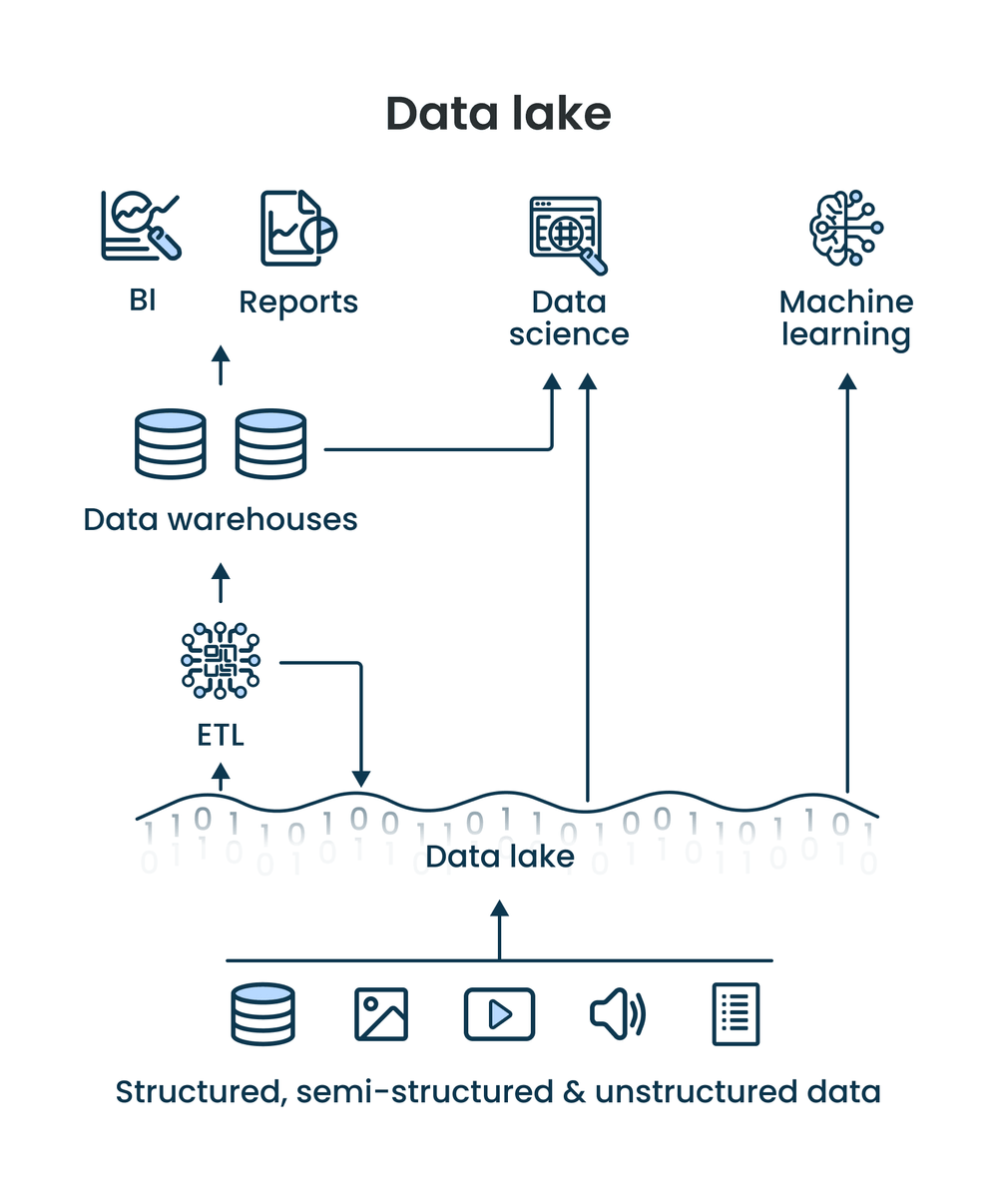

How do data lakes work?

Data lake is like a data warehouse, but backward. So instead of the ETL pipeline, data lakes use ELT: extract, load, transform.

- Extract. Just like with a data warehouse, your data gets automatically extracted from all your sources: databases, APIs, IoT devices, etc. It’s possible to retrieve data in bulk or in real time.

- Load. Data gets loaded into the data lake and stays there in native format until it’s required. Because data lakes store unstructured data, it’s easier to upload data just in case. The key is that data stays in the lake without any transformation.

- Transform. Before analyzing data, it still needs to get transformed. But in ELT, it’s the data scientists who define how to process the data. Just like in ETL, raw data is cleaned, enriched, and converted into a structured format.

- Load. Sometimes ELT is actually ELTL, because after transformation data still goes into a data warehouse. But you can also use it for your data science and machine learning projects.

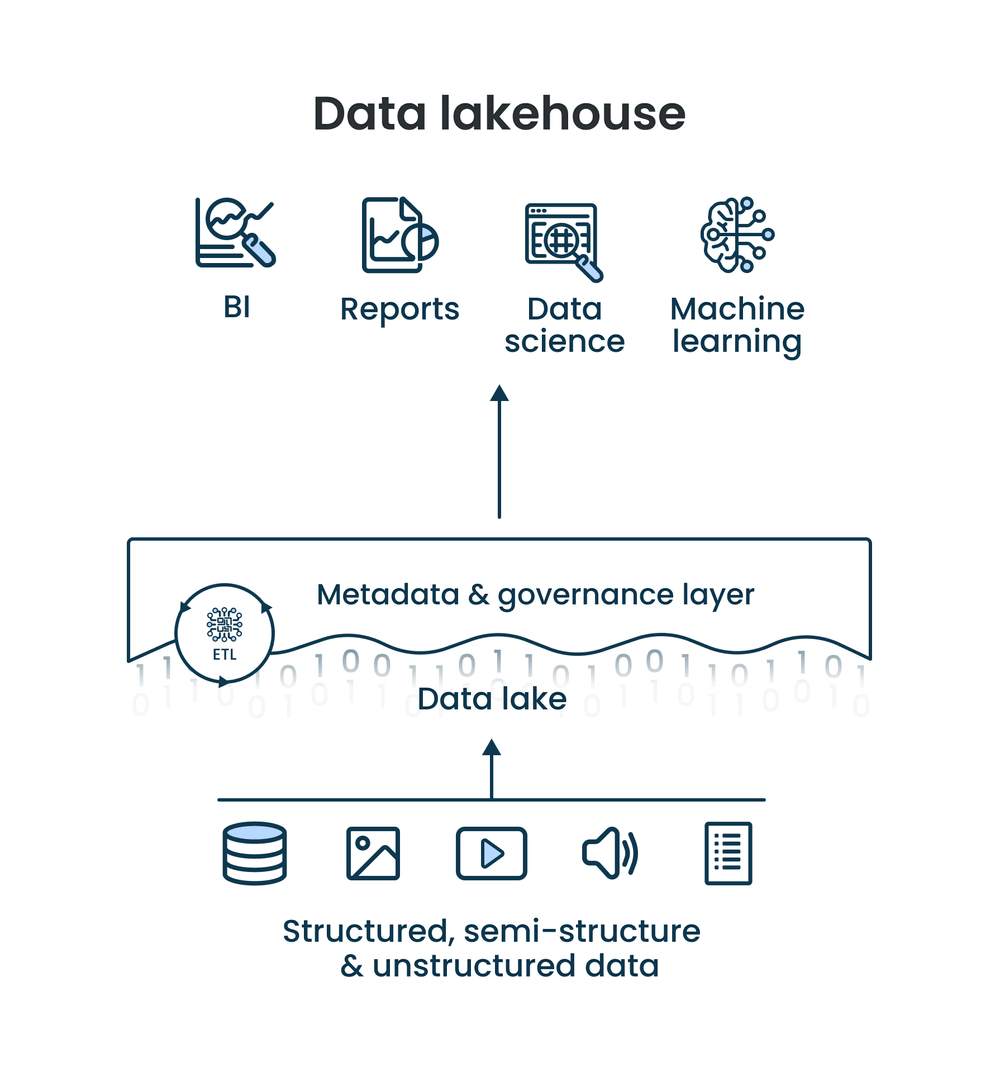

Data lakehouse: best of both worlds

Let’s recap the issues with data lakes and warehouses:

- Data warehouses are organized but too expensive for big data and AI projects. That’s because every time your data goes into a warehouse, it needs to be processed and organized. For big data, it gets too difficult. That’s also the reason why data warehouses are not real-time.

- Data lakes are cheaper but can turn into data swamps if you collect too much low-quality data: lots of duplicates, inaccurate or incomplete data.

Data lakehouses keep the advantages of both, and remove the disadvantages. They are cost-effective, but scalable and organized. A data lakehouse is great for both predictive and prescriptive analytics.

Your analysts can work with a lakehouse as if it was a regular data warehouse. But your data engineers and data scientists can set up machine learning experiments in the same place – as if it was a data lake.

The best part is that there’s no real downside. The only extra expense is the meta layer, which costs very little compared to having to process all data for a data warehouse.

Real-life example

A lakehouse is great when you need data for both descriptive and predictive analytics. That’s exactly the case with the AI solution we’ve built.

How do data lakehouses work?

On the surface, lakehouses look just like regular data lakes with raw data. This data also can be structured, semi-structured, or unstructured.

The difference is that data lakehouses have another layer for metadata and additional governance. This layer keeps metadata of all the objects in the lakehouse. This way, it’s just as easy to organize data as with a regular data warehouse.

Can analytics be collected without a data warehouse or data lake?

Sure. It’s still possible to analyze data without data lakes, warehouses, or lakehouses. But with no proper infrastructure, this process is manual – someone has to go and pull data from every source.

There are some problems with that, though:

- No scalability. Data always piles up: companies buy more tools, get more clients, and hire more employees all the time. At some point, it gets impossible for humans to process it all.

- Data needs to be updated. Data doesn’t just pile up – it changes, too. Your employees need to update all the spreadsheets, and some spreadsheets always need an update. After a while, you’ll have to let some spreadsheets go.

- Privacy and compliance risks. Some data is private by law. Other information is simply not for low-level employees. If you automate your data pipeline, you can easily manage access to your data.

- People make mistakes. Our brains are not built for repetitive work. When someone copies and pastes spreadsheets all day, there will inevitably be mistakes. These mistakes can cost a lot.

If you are serious about data analytics, you need proper infrastructure.

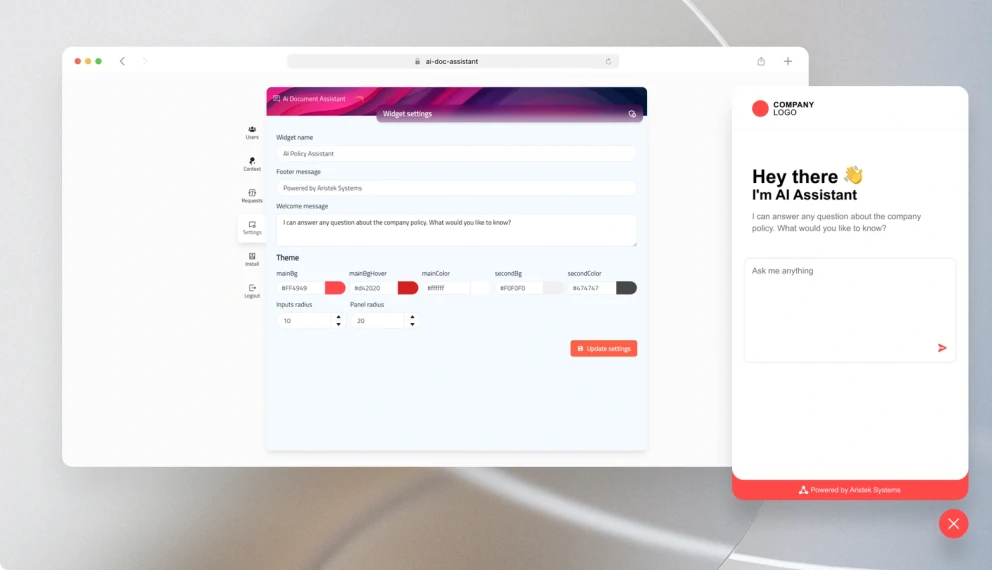

Real-life example

We automated data infrastructure, first. With this strong foundation, we built an AI assistant that keeps track of the company’s paperwork.

How to get modern data infrastructure

You need data engineers and data scientists. The good thing is – once you build your data infrastructure, maintenance is easy.

In most cases, it takes less than a year to set up a data warehouse. Also, if you want to build data infrastructure fast, you’ll need more than one data engineer.

But the more people you hire for development, the more will stay for maintenance. And when everything works well, maintaining infrastructure is not a full-time job. It often doesn’t make much sense to hire in-house data engineers. Especially, since this is a very well-paid position with a median salary of over $150,000.

Outsourcing is cheaper and faster. You can hire several engineers to build data infrastructure quickly. After launch, you can keep just one data engineer part-time for maintenance. But that engineer will know everything about the project because they built it.

We provide data engineering services here at Aristek Systems. We keep only the best data engineers and data scientists on board. They build data infrastructure from scratch or at any point in the project. Reach out for a free consultation.

Build your data infrastructure

Finally become a data-driven company. Automate your data pipeline with a data lake, warehouse, or even a lakehouse.