AI can be dangerous. The more powerful it becomes, the more people worry about AI compliance, and for good reason. AI systems are built on enormous datasets, and they can misuse private data, discriminate against people, or simply make false predictions.

That’s why we hear so much about AI regulation, such as the EU AI Act or the Blueprint for an AI Bill of Rights in the US. But neither is enforced yet. Instead, many local and industry-specific laws regulate AI.

For now, companies need to focus on privacy. AI solutions complicate compliance with privacy regulations like GDPR and security standards like ISO 27001. If you deal with clients from the EU, the US, or China, be on your guard.

This article lays out major regulations for AI compliance. Let’s dive in.

Note: this isn’t legal advice. Use this article for general understanding, but do consult your legal advisors before building an AI system.

Looking for an AI Compliance Consultant?

Our AI development engineers and analysts focus heavily on security and compliance and can guide you through the procedures relevant in 2024.

The EU AI Act is Coming. Why It Will Be Important Worldwide

So far, no country has a detailed legal framework for AI compliance, and AI regulation is still the Wild West. Done wrong, AI can unintentionally mess up grades, recruitment, road safety, justice, and the list goes on. But this should soon change, starting with the EU.

The EU Artificial Intelligence Act is likely to become law sometime in 2024. Like GDPR, it will affect the entire world, including the US: if your AI has users in the EU, you’ll have to comply.

The act is focused on high-risk AI applications, protecting people from the worst side effects of AI.

Here’s how it works. It puts all AI into 4 risk categories:

- Unacceptable risk solutions are outright banned. These include social scoring systems, biometric categorization based on sensitive characteristics, and real-time facial recognition in public places, though there can be some exceptions for law enforcement.

- High-risk solutions will be regularly assessed by EU regulators. This includes products covered by EU product safety legislation, such as toys, medical devices, cars, aviation, and lifts. It also applies to critical areas like education, employment, law enforcement, and legal interpretation.

- General-purpose and generative AI will have to be transparent and safe. Advanced models like GPT-4 and DeepSeek will be audited. Even simple generative AIs will have to disclose that their content is AI-generated, and they must never generate illegal content.

- Limited risk models will have minimal transparency requirements, but won’t have to be evaluated. For example, such solutions will have to disclose when users interact with an AI.
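The following is a minimal Python sketch of how a team might record a first-pass triage of its own AI use cases against these tiers. The use-case labels and tier assignments are hypothetical examples drawn from the categories above. They are not a legal classification. Anything the table doesn't cover is escalated rather than guessed.

```python
from enum import Enum


class RiskTier(Enum):
    """The four categories described above, with a one-line summary of obligations."""
    UNACCEPTABLE = "banned"
    HIGH = "regular assessment by regulators"
    GENERAL_PURPOSE = "transparency and safety; audits for advanced models"
    LIMITED = "disclose that users are interacting with AI"


# Hypothetical internal mapping; real assignments need legal review
# against the final text of the act.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "public_facial_recognition": RiskTier.UNACCEPTABLE,
    "medical_device": RiskTier.HIGH,
    "recruitment_screening": RiskTier.HIGH,
    "exam_grading": RiskTier.HIGH,
    "text_generation": RiskTier.GENERAL_PURPOSE,
    "support_chatbot": RiskTier.LIMITED,
}


def triage(use_case: str) -> RiskTier:
    """Look up a use case's tier; unknown use cases must go to manual review."""
    tier = USE_CASE_TIERS.get(use_case)
    if tier is None:
        raise ValueError(f"No tier recorded for {use_case!r}; escalate to legal review")
    return tier


print(triage("recruitment_screening").value)
```

An unmapped use case raises an error instead of defaulting to the lowest tier. This keeps new features from shipping without a review.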

Fines for non-compliance can be severe: up to €35 million or 7% of annual worldwide turnover, whichever is higher.

Other countries will be watching the EU act. Meanwhile, they are rolling out their own regulations:

- Canada’s Artificial Intelligence and Data Act;

- China’s Administrative Measures for Generative Artificial Intelligence Services (Generative AI Measures);

- Brazil’s Bill 21/20;

- Several other countries in the Americas and Asia are also developing their regulations.

The US Doesn’t Have Major AI Laws

In the US, AI compliance is not straightforward. So far, only state and local laws regulate AI here, though federal AI laws might come out in a few years. Here’s the rundown.

Executive Order 14110 is the most extensive AI regulation so far.

It doesn’t have immediate consequences for most AI developers. The only direct requirements apply to new major AI models that could pose risks to national security. Their developers have to notify the federal government when training such systems and share the results of safety tests. Most AI models fall outside this scope.

Instead, the Executive Order instructs government agencies to develop new rules soon. It also requires some federal agencies to appoint Chief AI Officers.
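The order makes "major" concrete with an interim technical threshold. Reporting applies to models trained with more than 10^26 integer or floating-point operations. A lower threshold applies to models trained mainly on biological sequence data.

A minimal sketch of a first-pass check follows. It assumes the common rule of thumb of about 6 operations per parameter per training token for dense transformers. That heuristic and the function names are assumptions for illustration, not part of the order.

```python
# Interim reporting threshold in Executive Order 14110: models trained with
# more than 10^26 integer or floating-point operations. (The lower threshold
# for models trained mainly on biological sequence data is not modeled here.)
EO_14110_THRESHOLD_OPS = 10**26


def estimated_training_ops(params: int, tokens: int) -> int:
    """Rough dense-transformer estimate: about 6 operations per parameter per token.

    The 6 * N * D rule of thumb is an assumption, not something the order prescribes.
    """
    return 6 * params * tokens


def likely_reportable(params: int, tokens: int) -> bool:
    """First-pass check: does the estimate exceed the order's threshold?"""
    return estimated_training_ops(params, tokens) > EO_14110_THRESHOLD_OPS


# Hypothetical model: 70 billion parameters trained on 2 trillion tokens.
print(likely_reportable(70 * 10**9, 2 * 10**12))
```

For this hypothetical model, the estimate is about 8.4 × 10^23 operations, more than 100 times below the threshold.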

Blueprint for an AI Bill of Rights is a purely voluntary document. Yet, future regulations may rely on it. The blueprint has 5 main principles:

- Safe and Effective Systems. It would protect users from unsafe systems by pre-deployment testing, risk identification, and ongoing monitoring;

- Algorithmic Discrimination Protections. It protects users against discrimination, based on race, gender, age, or any other sensitive characteristics;

- Data Privacy. It requires AI systems to have built-in privacy protections, and users should have agency over how their private data is used;

- Notice and Explanation. Users should know when an AI system is being used and how it affects them;

- Human Alternatives, Consideration, and Fallback. Whenever appropriate, users should be able to opt out of the AI tool and communicate with a person instead.

The Algorithmic Accountability Act has been introduced, but it’s far from becoming law. If passed, it would require companies to do 3 major things:

- Assess the impacts of the AI systems;

- Be transparent about using AI;

- Empower consumers to make informed choices when they interact with AI systems.

The act applies to critical decisions in healthcare, housing, education, employment, and other areas that significantly affect consumers’ lives.

Locally, US regulations already require AI compliance

While general AI regulations are still to come, we already have laws that govern specific AI applications. Usually, these are state or local laws. Here are some of them:

NYC Local Law 144 targets biased automated recruitment tools. Before using automated employment decision tools, employers must have them undergo an independent bias audit and make a summary of the results public.
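Bias audits of this kind compare outcomes across demographic groups. The sketch below assumes you have applicant and selection counts per group. It computes each group's selection rate and its impact ratio: the group's rate divided by the rate of the most-selected group. NYC's implementing rules ask auditors to report this metric. The group names and counts are hypothetical.

```python
def impact_ratios(applicants: dict[str, int], selected: dict[str, int]) -> dict[str, float]:
    """Return each group's selection rate divided by the highest group's rate."""
    rates = {}
    for group, total in applicants.items():
        if total == 0:
            raise ValueError(f"No applicants recorded for {group!r}")
        # Selection rate: share of the group's applicants who were selected.
        rates[group] = selected.get(group, 0) / total
    best = max(rates.values())
    if best == 0:
        raise ValueError("No one was selected, so impact ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical audit data for two groups.
applicants = {"group_a": 200, "group_b": 150}
selected = {"group_a": 50, "group_b": 30}
print(impact_ratios(applicants, selected))
```

A real audit also breaks results down by sex, race/ethnicity, and their intersections. Those categories would simply become more keys in the input dictionaries.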

Colorado SB21-169 prohibits insurance companies from unfairly discriminating against customers. With Big Data, insurers could unintentionally harm protected groups. The law protects against algorithmic discrimination based on race, color, national or ethnic origin, religion, sex, sexual orientation, disability, gender identity, or gender expression.

Connecticut SB 1103 regulates the state government’s use of AI. Starting in 2024, state agencies have to inventory the AI systems they use. They also need to perform assessments to make sure their AI systems don’t discriminate or give anyone an unfair advantage.

Why you should focus on privacy & GDPR

Until we have major AI regulations, companies should focus on data protection and privacy. These laws are not new, but complying with them is harder for AI than for regular software.

Since there is no US federal data protection regulation, the main focus is on GDPR. But we’ll cover Californian, Chinese, and Canadian laws too.

Should US companies care about GDPR? Yes. GDPR applies to any business in the world that offers goods or services to people in the EU or monitors their behavior. That’s why most US companies with EU users need to follow it.

There are also GDPR equivalents in the US and all over the world: CCPA in California, PIPEDA in Canada, PIPL in China, and others. Laws like these are why websites all over the world ask for your cookie consent.

What is GDPR? The name is pretty straightforward: General Data Protection Regulation. It is arguably the world’s strongest data protection law.

It protects any data that can identify a person, directly or indirectly. You can identify a person by their name and location (e.g., John Johnson is near the Eiffel Tower). But you can also track someone without a name: police can identify a car owner by the license plate, and tech companies can track users across sites with third-party cookies. Personal data also includes information about health, race, or political beliefs.

Penalties for non-compliance are huge. Smaller offenses can be fined up to €10 million or 2% of global annual turnover, whichever is higher. Major offenses can be fined up to €20 million or 4% of global annual turnover, whichever is higher. In 2023, Meta was fined €390 million in January and then €1.2 billion in May, the largest GDPR fine to date.
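Under the "whichever is higher" rule, the percentage cap only matters for large companies. Below is a minimal sketch of the two caps described above, using integer euros. The tier names mirror this article's wording. The result is only an upper bound, since regulators set actual fines case by case.

```python
# GDPR fine caps (Article 83): a fixed amount or a share of worldwide
# annual turnover, whichever is higher.
FINE_CAPS = {
    "smaller": (10_000_000, 2),  # (cap in EUR, percent of turnover)
    "major": (20_000_000, 4),
}


def max_gdpr_fine(annual_turnover_eur: int, offense: str) -> int:
    """Upper bound in EUR for a fine; regulators set actual amounts case by case."""
    fixed_cap, percent = FINE_CAPS[offense]
    # Integer arithmetic avoids floating-point rounding on large turnovers.
    return max(fixed_cap, annual_turnover_eur * percent // 100)


print(max_gdpr_fine(1_000_000_000, "major"))
```

For a company with €100 million in turnover, 4% is €4 million, so the €20 million cap applies. At €1 billion, 4% (€40 million) takes over.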

What are the 7 key principles of GDPR? All of them are equally important for GDPR compliance. Some come from the 1995 Data Protection Directive; others are new.

- Lawfulness, fairness, and transparency. You have to identify legal reasons for data processing. You should be transparent about what you’re doing with the personal data.

- Purpose limitation. Collect personal data only for specified, explicit, and legitimate purposes, and don’t later use it in ways that are incompatible with them. In other words, you can’t promise never to share cookie data with third parties and then… share it with third parties.

- Data minimization. Only collect data that is necessary for your purpose, never just in case.

- Accuracy. Keep personal data accurate and up-to-date. Let your users correct their data or erase it.

- Storage limitation. Store personal data only for as long as necessary. Establish clear timeframes and dispose of the data when it’s no longer needed. Some retention periods are set by law, as with tax records. In other cases, they can be more flexible, but you still need to set an appropriate time frame.

- Security (Integrity and confidentiality). Implement appropriate security measures: encrypt your data, isolate PII from other data, etc. Learn more in our article about personally identifiable information.

- Accountability. The controller (we’ll describe who it is soon) is responsible for compliance with the other 6 principles. Be prepared to demonstrate compliance to supervisory authorities upon request.

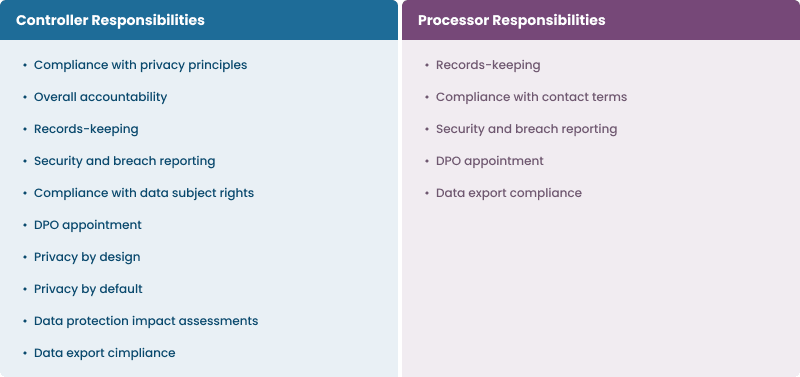
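Storage limitation and data minimization translate fairly directly into code. Here is a minimal sketch. It assumes each record is tagged with the purpose it was collected for. The purposes, field lists, and retention periods are hypothetical placeholders to replace with your own policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical per-purpose policy: which fields each purpose needs...
ALLOWED_FIELDS = {
    "newsletter": {"email"},
    "billing": {"name", "email", "address", "tax_id"},
}
# ...and how long records collected for it may be kept.
RETENTION = {
    "newsletter": timedelta(days=365),
    "billing": timedelta(days=365 * 10),  # placeholder: set from applicable tax law
}


@dataclass
class Record:
    purpose: str
    collected_at: datetime
    data: dict


def minimize(purpose: str, raw: dict) -> dict:
    """Data minimization: keep only the fields the stated purpose needs."""
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in raw.items() if key in allowed}


def is_expired(record: Record, now: datetime) -> bool:
    """Storage limitation: True once the purpose's retention period has passed."""
    return now - record.collected_at > RETENTION[record.purpose]


signup = minimize("newsletter", {"email": "a@example.com", "age": 30})
record = Record("newsletter", datetime(2023, 1, 1), signup)
print(signup, is_expired(record, datetime(2024, 6, 1)))
```

Running `is_expired` in a scheduled job, and deleting whatever it flags, turns the retention policy into something you can demonstrate to a supervisory authority.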

What are GDPR controllers and processors? Under GDPR, companies that handle personal data are either controllers or processors.

The status matters because controllers have many more obligations than processors. Even if a breach happens on the processor’s end, the controller is still responsible for dealing with it. The status also shapes your relationships with suppliers and customers.

Controllers determine why and how to process data. In formal terms, they define the purpose and the essential means of processing. Some of the essential means include:

- What data to process?

- What’s the retention period?

- Should they disclose the data?

Processors process the data on behalf of the controller. They follow the controller’s instructions and can’t appoint sub-processors without the controller’s consent. They can define the non-essential means, like:

- Programming languages, frameworks;

- Security measures;

- Architectural measures: APIs, microservices, and virtual machines.

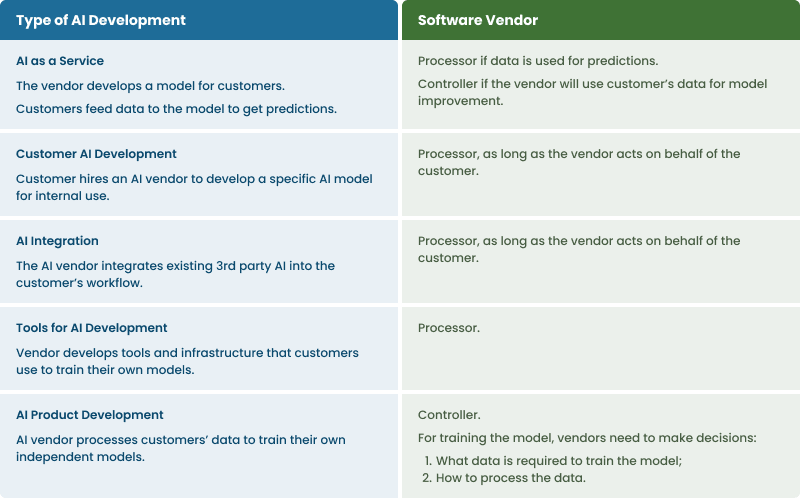
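The split above can be sketched as a simple decision rule. This is a simplification: it assumes that deciding the purpose, or any of the essential means listed above, makes an organization a controller. Real cases can involve joint controllership and depend on the facts, so treat the output as a prompt for legal review. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProcessingDecisions:
    """Who decides what for one processing activity (hypothetical model)."""
    decides_purpose: bool     # why the data is processed
    decides_what_data: bool   # essential means
    decides_retention: bool   # essential means
    decides_disclosure: bool  # essential means
    # Non-essential means (languages, security measures, APIs) don't affect the role.


def gdpr_role(d: ProcessingDecisions) -> str:
    """Simplified rule: deciding the purpose or any essential means makes you a controller."""
    essential = d.decides_what_data or d.decides_retention or d.decides_disclosure
    return "controller" if d.decides_purpose or essential else "processor"


vendor = ProcessingDecisions(
    decides_purpose=False,
    decides_what_data=False,
    decides_retention=False,
    decides_disclosure=False,
)
print(gdpr_role(vendor))
```

A vendor that only picks frameworks and security measures stays a processor. Once it decides its own purposes for the data, the rule flags it as a controller.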

What does GDPR mean for AI compliance? The important part is to determine whether your AI vendor will be a controller or a processor.

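Companies working under GDPR usually assign the processor role to software vendors. However, AI vendors will often need the controller role, for example when they use customer data to train or improve their own models and therefore decide the purpose of that processing.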

Let’s break down some examples:

How to Handle Privacy in Other Countries?

Here’s the trick: make your privacy governance principle-based. If you base your privacy on principles, it’ll be much easier to adjust to many specific regulations.

In most countries, regional regulations are similar to GDPR in some way. There are differences, but they still have a lot in common. For example, GDPR describes Controllers and Processors, while California’s CCPA has similar roles named Businesses and Service Providers.

To give you an idea, here are some privacy regulations around the globe:

In the USA, there is no federal data protection regulation. Instead, states pass legislation individually, such as California’s CCPA and Colorado’s CPA, while bills like New York’s NYPA are still pending.

A federal privacy act, the ADPPA, has been introduced but not yet passed.

In China, there’s the PIPL (Personal Information Protection Law). For foreign companies, the most important part is cross-border data controls: there are strict rules on transferring personal data out of China.

Under PIPL, there are three mechanisms for exporting personal information out of the PRC:

- Security Assessment;

- Certification;

- Standard Contract.

Yet the original requirements lacked clarity and were difficult to meet.

In 2023, China proposed easing cross-border data controls in its Draft Provisions. They set a new threshold for security assessments: if a company transfers the data of fewer than a million individuals, there’s no need for one. Here are the new thresholds:

- > 1 million individuals. Requires security assessment;

- 10,000 to 1 million individuals. Requires personal information protection certification or a standard contract published by the Cyberspace Administration of China (CAC);

- < 10,000 individuals. No required transfer mechanism.

Still, all important data transfers require a security assessment. At first, the definition of “important data” was murky. Now, companies can presume that they don’t possess important data unless they were informed otherwise by regulators or through a public notice.
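The tiered thresholds can be expressed as a small function. Below is a minimal sketch of the 2023 draft rules as summarized above. How the exact boundary values (10,000 and 1 million) are treated is an assumption. The `has_important_data` parameter defaults to False, mirroring the presumption just described.

```python
def pipl_transfer_mechanism(individuals: int, has_important_data: bool = False) -> str:
    """Required mechanism for exporting personal information under the 2023 draft thresholds.

    Assumption: exactly 10,000 falls in the middle tier, and only counts
    above 1,000,000 trigger a security assessment.
    """
    if has_important_data or individuals > 1_000_000:
        return "security assessment"
    if individuals >= 10_000:
        return "certification or CAC standard contract"
    return "no transfer mechanism required"


print(pipl_transfer_mechanism(50_000))
```

The important-data check comes first because it overrides the headcount tiers.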

Other countries have their own rules. In Canada, there’s PIPEDA. In Brazil, there’s LGPD. In Japan, there’s APPI. Be aware of them before entering a local market.

Information Security: SOC 2 and ISO 27001

These standards protect sensitive information. Both cover processes, policies, and technologies across an organization.

The standards have their differences. ISO 27001 is international, while SOC 2 is mostly used in North America. SOC 2 applies only to service organizations, while ISO 27001 can apply to any organization.

Neither SOC 2 nor ISO 27001 is legally required. Yet many customers demand compliance from their software vendors.

SOC 2 is a security compliance report designed for companies that handle customer data, be it cloud development or machine learning development.

As a customer, you don’t have to go too deep, but do check whether your vendor complies with SOC 2. The report revolves around 5 Trust Services Criteria, but only security is mandatory.

- Security. Ensuring protection against unauthorized access and data breaches. Again, this criterion is required for all SOC 2 reports;

- Availability. Ensuring that applications are accessible and operational;

- Processing Integrity. Verifying that AI processes are accurate, complete, and timely;

- Confidentiality. Safeguarding sensitive data from unauthorized disclosure;

- Privacy. Managing personal information in accordance with privacy policies and regulations.

SOC 2 reports come in two types:

- Type I is a point-in-time report. It assesses how an organization has designed its controls as of a specific date;

- Type II covers a period of time, typically 3 to 12 months, and tests whether the controls actually worked throughout it. The audit itself usually takes only a few weeks but covers the entire period.

ISO 27001 is another information security standard. It checks the compliance of your Information Security Management System (ISMS), which means your software vendor will need to establish one.

The goal of an ISMS is to protect three aspects of data. Unlike SOC 2, ISO 27001 requires compliance with all 3:

- Confidentiality. Only authorized persons have the right to access information;

- Integrity. Only authorized persons can change the information;

- Availability. The information must be accessible to authorized persons whenever it is needed.

US Industry-specific Regulations

Critical industries have their regulations, too. Here are just a few of the US industry-specific laws:

In Healthcare, HIPAA is the most important regulation. It makes sure that your health information is kept private and secure. It sets rules for doctors, hospitals, and others about how they can use and share your health details.

HIPAA also gives you the right to control your health information and limits how your information can be shared without your permission.

In education software development, the most important laws for AI developers are FERPA and COPPA. Read more in our article about PII Security in education.

FERPA protects the privacy of student education records at schools that receive federal education funding, limiting who can access and disclose them.

COPPA is a privacy law focused on online services that collect personal information from children under 13.

Apply AI in EdTech responsibly with Aristek

Our software solutions are developed in line with all the necessary regulations, whether our customers opt for off-the-shelf or custom ones.

In Finance, GLBA is a crucial regulation. It has guidelines for banks and financial institutions, outlining how they can use personal financial details.

GLBA also provides you with rights to control your financial information and places restrictions on how this information can be shared without your consent.

How to Handle AI Compliance in 2024

AI regulation is coming, so companies had better prepare. For now, focus on privacy laws like GDPR or CCPA.

Here are the key steps for handling AI compliance:

- Establish a clear system of compliance policies. With so many laws, it’s easy to get lost, so keep things organized. Understand which markets you will be in and single out their local privacy and AI regulations;

- Map your compliance. Compliance mapping software helps you keep track of regulations, both old and incoming ones. Getting such a solution will simplify compliance;

- Hire a vendor that takes compliance seriously. For example, at Aristek Systems we develop AI solutions with a heavy focus on security and compliance. Want to learn more? Reach out for a free consultation.
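Mapping your compliance can start as simply as a lookup table from markets to the laws named in this article. Below is a minimal sketch. The market keys are hypothetical. The map deliberately mixes enacted laws with pending bills worth tracking. Industry-specific rules (HIPAA, GLBA, Colorado SB21-169) would need a separate sector dimension.

```python
# Hypothetical starting map built from the regulations named in this article.
# It includes pending bills; update it with your legal team as laws change.
REGULATIONS_BY_MARKET = {
    "EU": {"GDPR", "EU AI Act"},
    "California": {"CCPA"},
    "Colorado": {"CPA"},
    "China": {"PIPL", "Generative AI Measures"},
    "Canada": {"PIPEDA", "Artificial Intelligence and Data Act"},
    "Brazil": {"LGPD", "Bill 21/20"},
    "Japan": {"APPI"},
}


def applicable_regulations(markets: list[str]) -> list[str]:
    """Union of regulations to track for the given markets, sorted for review."""
    found: set[str] = set()
    for market in markets:
        if market not in REGULATIONS_BY_MARKET:
            # Unmapped markets are a gap in the policy, not "no regulations".
            raise KeyError(f"No regulations mapped for {market!r} yet")
        found |= REGULATIONS_BY_MARKET[market]
    return sorted(found)


print(applicable_regulations(["EU", "California"]))
```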