AI has rapidly made its way into almost every industry. But with that rise comes an equally fast-growing list of myths. Some are harmless. Others lead to real risk, especially when they influence how organizations approach security.

Maybe this is just noise? Not really.

When it comes to implementation, the concerns are real. For example, 81% of U.S. executives say cybersecurity is their top challenge with generative AI. Right after that comes data privacy at 78%. By region, 42% of North American organizations and 56% of European ones rank privacy and data leaks as the primary AI-related risks.

This is not a bad thing. If a company worries about security, it means it understands what’s at stake: customer trust, business continuity, legal compliance. That’s a healthy instinct.

But concern without clarity opens the door to confusion. And confusion is exactly where myths thrive, shaping decisions, slowing down projects, hindering innovation, or leading teams in the wrong direction.

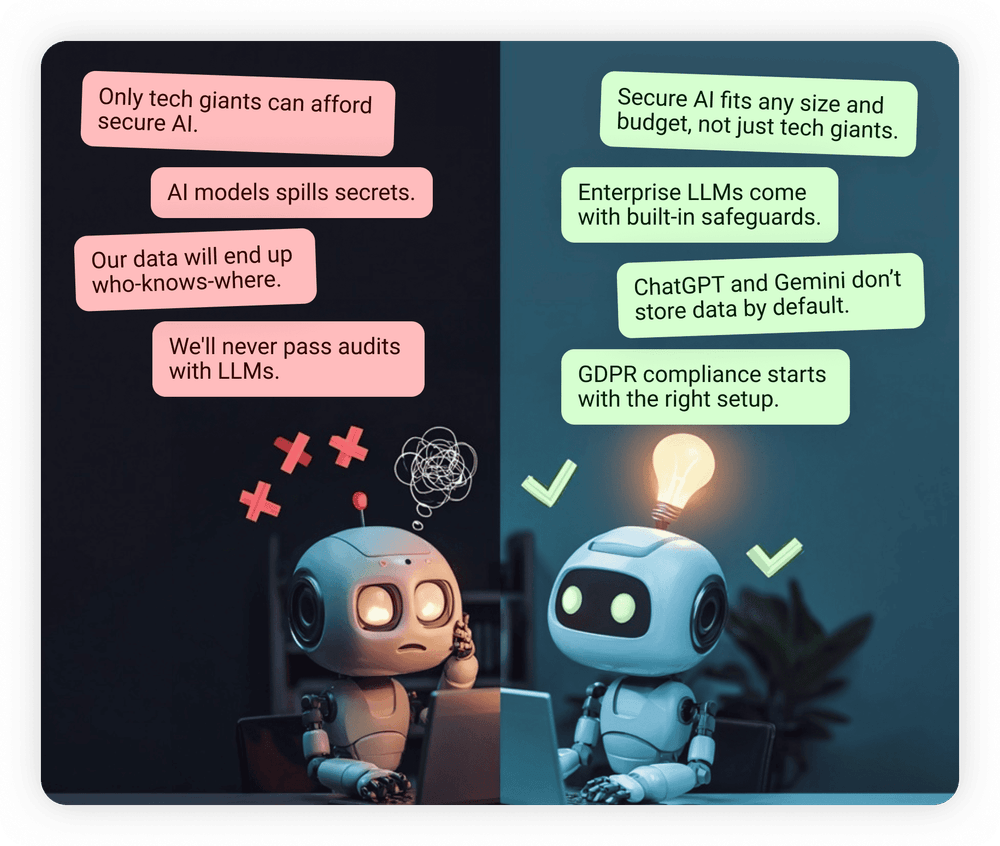

Our AI and data science expert, Viktoryia Makarskaya, has gathered the most common myths about AI and LLM security that she hears from clients. Below, she breaks down five of the most persistent ones and explains why they don’t hold up.

– LLMs constantly hallucinate and give incorrect information, so they can’t be trusted in business processes

Large language models are known to “hallucinate” – generating statements that sound plausible but are factually incorrect. For many organizations, this is reason enough to keep them away from anything that touches core operations. If the tool can’t reliably tell the truth, why risk using it in business-critical workflows?

The fear is simple: one wrong output, and you’ve got misinformation spreading through internal systems or going out to customers.

– Yes, hallucinations happen, but that doesn’t mean LLMs are unreliable in business settings.

Let’s unpack that.

1. Quality standards are the backbone

Enterprise-grade models like ChatGPT and Google Gemini are developed under strict quality standards. For instance, OpenAI complies with SOC 2 Type I and II, which set requirements for security and operational controls. Gemini is built on Google Cloud, which is certified under ISO 27001 and SOC 2. These aren’t just badges; they mean the services are developed, tested, and updated under structured, independently verified processes.

2. There’s a second layer of control

Beyond that, companies don’t use LLMs as-is. You can layer them with your own business logic, filters, and validation rules. It’s possible to define exactly what kind of output is allowed, and set up checks that catch irrelevant or incorrect results. This isn’t optional; it’s a standard part of deploying LLMs in production.
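
To make the idea of validation rules concrete, here is a minimal sketch of an output check. It assumes the model was asked to answer in JSON with a `category` and a `confidence` field; that reply format, the `ALLOWED_CATEGORIES` set, and the function names are hypothetical and only serve the illustration.

```python
import json

# Hypothetical business rule: the only ticket categories downstream systems accept.
ALLOWED_CATEGORIES = {"billing", "technical", "account"}


def validate_reply(raw: str) -> dict:
    """Parse a model reply and reject anything outside the expected shape."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("reply is not valid JSON") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")

    category = data.get("category")
    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        raise ValueError(f"unexpected category: {category!r}")

    confidence = data.get("confidence")
    is_number = isinstance(confidence, (int, float)) and not isinstance(confidence, bool)
    if not is_number or not 0 <= confidence <= 1:
        raise ValueError("confidence must be a number between 0 and 1")

    return {"category": category, "confidence": float(confidence)}


def safe_reply(raw: str, fallback=None):
    """Return the validated reply, or a fallback (e.g. human review) if it fails."""
    try:
        return validate_reply(raw)
    except ValueError:
        return fallback
```

Anything that fails the check never reaches the business process; it can be retried or routed to a person instead.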

3. Ongoing updates keep improving accuracy

Monitoring also plays a role. Regular testing helps detect inconsistencies early, while updates from providers continuously reduce risks. Both OpenAI and Google ship frequent patches that improve model reliability. Newer versions don’t just perform better; they’re also more stable in real use cases.
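
Regular testing can be as simple as replaying a fixed set of questions and checking that the answers still contain the facts you expect. The sketch below assumes a hypothetical golden set and uses a stub in place of a real model call so it runs offline; the questions, expected facts, and function names are made up for illustration.

```python
from typing import Callable

# Hypothetical golden set: each prompt is paired with a fact the answer must contain.
GOLDEN_CASES = [
    ("What is our refund window?", "30 days"),
    ("Which plan includes SSO?", "Enterprise"),
]


def run_regression(ask: Callable[[str], str], cases=GOLDEN_CASES) -> list[str]:
    """Return the prompts whose answers no longer contain the expected fact."""
    failures = []
    for prompt, expected in cases:
        answer = ask(prompt)
        if expected.lower() not in answer.lower():
            failures.append(prompt)
    return failures


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call; the second answer is deliberately wrong."""
    canned = {
        "What is our refund window?": "Refunds are accepted within 30 days.",
        "Which plan includes SSO?": "SSO is available on the Pro plan.",
    }
    return canned.get(prompt, "")


print(run_regression(stub_model))  # prints ['Which plan includes SSO?']
```

Running a check like this after every provider update or prompt change surfaces regressions before users see them.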

4. Hallucinations can be useful in the right context

Not all hallucinations are mistakes. In creative tasks, like writing marketing copy, generating product descriptions, or brainstorming concepts, controlled hallucination is actually helpful. It enables new ideas and variety, which are often the goal in these use cases. The key is knowing when you need factual precision, and when you want creativity.

– Using LLMs means exposing sensitive data and violating security or privacy standards

The fear here is straightforward: once you start using an LLM, your data is exposed. Maybe it’s being sent to a third-party server. Maybe it’s being stored without your knowledge. Or maybe it’s training the model without your consent.

In any case, for companies dealing with sensitive data, such as customer records, financial details, and internal IP, this risk feels unacceptable. If compliance is a legal obligation, not a preference, even small uncertainty looks like a deal-breaker.

– This one comes up a lot. But enterprise LLMs are built with compliance and data protection in mind.

1. Certifications prove the model is under control

ChatGPT (OpenAI) follows globally recognized security standards including ISO 27001 and SOC 2. These aren’t internal claims; they require regular audits by independent experts who verify that data handling, access controls, and infrastructure meet defined security benchmarks.

Google Cloud, which powers Gemini, goes even further, supporting compliance with PCI DSS, HIPAA, and FedRAMP and covering everything from healthcare to government-grade environments.

2. Deployment can happen inside your perimeter

LLMs don’t have to run in the public cloud. For industries with strict data localization or internal security policies, companies can deploy open-source models like Llama or Mistral in private clusters or on-premise environments. While GPT and Gemini are typically hosted by their providers, self-hosted LLMs can offer similar capabilities with full control over infrastructure and data.

3. Fine-grained access control is built in

Just like any secure system, enterprise LLM setups support detailed access control. Role-based access control (RBAC), IP restrictions, encrypted communication channels – all of these are standard tools. Companies can define exactly who has access, when, and from where. Nothing gets through without passing established policies.
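
As a rough sketch of what "who has access, and from where" can look like in code, the example below combines a role check with an IP allow-list. The roles, the network range, and the `Caller` type are hypothetical; a real deployment would pull them from its identity provider and network policy.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical policy: which roles may call the model, and from which networks.
ALLOWED_ROLES = {"analyst", "support_agent"}
ALLOWED_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]


@dataclass(frozen=True)
class Caller:
    user: str
    role: str
    ip: str


def is_allowed(caller: Caller) -> bool:
    """Allow the request only if both the role and the source IP pass policy."""
    if caller.role not in ALLOWED_ROLES:
        return False
    try:
        address = ipaddress.ip_address(caller.ip)
    except ValueError:
        return False  # malformed address: deny by default
    return any(address in network for network in ALLOWED_NETWORKS)
```

The check denies by default: an unknown role, an outside address, or a malformed address all end in a refusal.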

4. You can filter requests before they reach the LLM

Another layer of safety comes from controlling how users interact with the model. Instead of sending data directly to an external API, companies can route all requests through an internal web service.

This acts as a gatekeeper: if a request includes sensitive information, like personal identifiers or financial details, it can be blocked before ever leaving the secure network. This setup makes it easier to ensure that no private or regulated data leaks by accident.
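
Here is a minimal sketch of such a gatekeeper. The two regular expressions are illustrative only and would miss many real cases; production setups would more likely rely on a vetted PII detection tool. The `forward` argument is a hypothetical stand-in for the call to the external API.

```python
import re

# Illustrative patterns only; they are not a complete PII detector.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def find_sensitive(text: str) -> list[str]:
    """Return the names of every pattern that matches the text."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]


def gatekeeper(prompt: str, forward) -> str:
    """Forward the prompt only when no sensitive pattern is found."""
    hits = find_sensitive(prompt)
    if hits:
        return "Blocked: request contains " + ", ".join(hits)
    return forward(prompt)
```

A blocked request never leaves the internal service, so the external provider only ever sees prompts that passed the filter.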

– Using ChatGPT or Gemini is against GDPR and other data protection laws

It’s a common assumption: if you send any data to a third-party AI provider, you’re automatically violating data protection laws.

For companies operating under GDPR or sector-specific regulations, this fear feels justified. Sensitive data includes everything from names and emails to transaction histories and patient records, and sharing it, even unintentionally, can have legal consequences. The logic is simple: if LLMs handle user data, they must be non-compliant by default.

– GDPR compliance is serious, but that doesn’t mean you can’t use LLMs. It just means you have to use them correctly.

1. Data isn’t stored by default

OpenAI offers settings under which API data is not used to train models and is not stored permanently. Enterprise customers control these settings and can ensure that no personal or sensitive information is retained. Similarly, Gemini for Google Workspace and Google’s enterprise offerings provide configuration options to avoid retaining prompts.

In both cases, customer data stays private unless the customer explicitly opts in to training. No silent data hoarding.

2. Privacy by design isn’t optional. It’s built in

GDPR’s principle of data protection by design and by default (Article 25), often called Privacy by Design, requires that systems minimize data exposure from the start. Both OpenAI and Google claim to follow this principle in their enterprise offerings, embedding default safeguards that reduce the risk of data misuse.

This includes features like session-based processing, data expiration, and user consent requirements.

3. Anonymization adds another layer of protection

Before sending any input to an LLM, companies can preprocess data, replacing personal identifiers with pseudonyms or anonymized placeholders. This is a common practice in regulated industries like healthcare (under HIPAA) or finance (under PCI DSS).

It means that even if data is processed externally, direct personal identifiers are not exposed.
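
The preprocessing step can be sketched in a few lines. This example handles only email addresses, with a simplified pattern, and keeps the placeholder mapping in memory; the placeholder format and function names are assumptions made for the illustration.

```python
import re

# Simplified email pattern, for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize(text: str) -> tuple[str, dict[str, str]]:
    """Swap each email address for a stable placeholder and return the mapping."""
    placeholders: dict[str, str] = {}  # original value -> placeholder

    def replace(match: re.Match) -> str:
        value = match.group(0)
        if value not in placeholders:
            placeholders[value] = f"<EMAIL_{len(placeholders) + 1}>"
        return placeholders[value]

    masked = EMAIL.sub(replace, text)
    # Invert the mapping so the caller can restore values in the model's answer.
    return masked, {token: value for value, token in placeholders.items()}


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into text that contains placeholders."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text


masked, mapping = pseudonymize("Reply to ann@example.com and cc bob@example.org.")
print(masked)  # prints: Reply to <EMAIL_1> and cc <EMAIL_2>.
```

Only the masked text goes to the model. The mapping stays inside the company’s own environment, where `restore` can put the real values back into the response.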

4. On-premise and regional deployment are possible

For companies with strict data residency requirements, there’s the option to host open-source models or inference layers in private environments. This includes on-premise servers or regional cloud infrastructure, which is critical in jurisdictions with local storage laws (such as Germany under the BDSG).

GPT and similar proprietary models remain cloud-hosted, but self-hosted alternatives let companies retain full control over where and how data is processed.

– LLMs can be easily manipulated with malicious prompts into revealing confidential information

There’s a growing concern that large language models can be manipulated. The idea is that if someone crafts the right prompt – often called a “jailbreak” – they can trick the model into leaking sensitive data or bypassing built-in restrictions.

For companies considering LLMs in internal tools or customer-facing apps, this risk can seem like a showstopper. If an attacker can ask the right question and extract private information, then why trust the system at all?

– Yes, prompt injection is a known threat. But no, it doesn’t mean your LLM-based app is wide open.

In secure environments, there are multiple layers that block such attempts.

1. Built-in moderation and prompt-level filters

Both OpenAI (ChatGPT) and Google (Gemini) apply robust content moderation tools to filter out malicious inputs. Attempts to extract sensitive or restricted information, like asking the model to impersonate a system, or reveal proprietary content, are flagged and blocked.

2. Sensitive data never reaches the LLM

In well-designed systems, private or sensitive data is filtered, masked, or handled outside the LLM context. This means the model doesn’t have access to confidential information in the first place and therefore cannot leak what it hasn’t seen.
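
One simple way to keep confidential fields out of the LLM context is an allow-list: only fields that are explicitly approved get rendered into the prompt. The field names and the sample record below are hypothetical.

```python
# Hypothetical allow-list: the only record fields the model is permitted to see.
PROMPT_SAFE_FIELDS = ("ticket_id", "product", "issue_summary")


def build_context(record: dict) -> str:
    """Render only allow-listed fields into the prompt context."""
    lines = [
        f"{field}: {record[field]}"
        for field in PROMPT_SAFE_FIELDS
        if field in record
    ]
    return "\n".join(lines)


record = {
    "ticket_id": "T-1042",
    "product": "Billing portal",
    "issue_summary": "Invoice PDF fails to download",
    "customer_email": "jane@example.com",  # not allow-listed, so never rendered
    "card_last4": "4242",                  # not allow-listed, so never rendered
}

context = build_context(record)
```

An allow-list fails safe: a new sensitive field added to the record later stays out of the prompt until someone deliberately approves it.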

3. Certifications come with security requirements

The underlying infrastructure also complies with standards like SOC 2 and ISO 27001, which don’t just address data handling, but also require vulnerability management and incident response processes. These processes are built to prevent and respond to manipulation attempts.

4. Monitoring and audit logs are part of the package

Well-designed LLM systems log every interaction. This means that if someone tries something shady, there’s a trail. Suspicious behavior, such as unusual input patterns, high-frequency requests, or repeated prompt injection attempts, can be flagged, investigated, and blocked.

These logs can also be integrated with SIEM platforms like Splunk, IBM QRadar, or Microsoft Sentinel (formerly Azure Sentinel), giving security teams full visibility across tools.
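
A minimal sketch of an audit trail is a structured log line per interaction, which log pipelines can typically ingest as JSON. The field names are assumptions, and storing a hash of the prompt instead of the raw text is a design choice made here so that the audit log does not itself become a store of sensitive data.

```python
import hashlib
import json
import logging
from datetime import datetime, timezone

audit_logger = logging.getLogger("llm.audit")


def audit_record(user: str, prompt: str, decision: str) -> str:
    """Build one JSON line describing an interaction, without the raw prompt."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "decision": decision,  # for example "allowed" or "blocked"
    }
    return json.dumps(entry, sort_keys=True)


def log_interaction(user: str, prompt: str, decision: str) -> str:
    """Write the audit line to the logger and return it for further handling."""
    line = audit_record(user, prompt, decision)
    audit_logger.info(line)
    return line
```

Teams that need the full prompt for investigations can log it to a separate, access-controlled store and keep only the hash in the shared audit stream.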

5. Automated controls block mass abuse

To prevent brute-force prompting or bot-driven probing, LLM platforms use rate limits, anomaly detection, and session monitoring. If something smells like an attack – too many requests, strange formatting, or high-risk terms – it can be throttled or cut off automatically.
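
Rate limiting is the easiest of these controls to picture. The sketch below is a generic sliding-window limiter, not the mechanism any particular platform uses; the class name and parameters are hypothetical, and the current time is passed in explicitly so the behavior is easy to test.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client within `window` seconds."""

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        self._hits = defaultdict(deque)  # client id -> timestamps of recent requests

    def allow(self, client: str, now: float) -> bool:
        hits = self._hits[client]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: throttle this request
        hits.append(now)
        return True
```

In a running service, `now` would typically come from `time.monotonic()`, and a rejected request would be answered with a "too many requests" error instead of being sent to the model.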

– Secure LLM implementation and support are too complex and expensive for most companies

Deploying AI securely sounds like something only tech giants can afford. Between compliance, infrastructure, integration, and ongoing support, many organizations assume that the cost and effort will outweigh the benefits, especially if they don’t have large internal AI teams or unlimited budgets.

In this view, secure LLM adoption is seen as slow, risky, and out of reach for most companies.

– You don’t need a massive budget or team to deploy AI securely.

Let me walk you through what’s actually needed.

1. Deployment can start small – and scale with need

Secure AI adoption doesn’t require building custom infrastructure from day one. Companies can start with hosted APIs, like OpenAI’s ChatGPT or Google’s Gemini in Google Cloud. These platforms are already certified under standards like ISO 27001 and SOC 2, so baseline security and compliance are covered from the start.

For organizations with stricter requirements, on-premise or private cloud deployments are available, but those are not mandatory for most use cases.

2. LLMs automate high-cost, low-value tasks

One of the clearest returns comes from automating repetitive work – internal support, data entry, reporting, customer service triage, and more. These aren’t hypothetical savings. Businesses using LLMs to streamline operations often reduce manual workload and related costs significantly. In many cases, the savings offset infrastructure investments within the first year of deployment.

3. Integration is not a custom build

Connecting LLMs to internal systems doesn’t mean writing code from scratch. Both OpenAI and Google provide mature SDKs, APIs, and plugins that work with widely used platforms like CRMs, ERPs, ticketing systems, and data tools.

This reduces the time and risk involved in deployment, including risks related to security misconfiguration.

4. Certification overhead is lower than it used to be

Much of the foundational compliance work, like infrastructure certification and audit readiness, has already been done by the providers. Companies using OpenAI’s or Google’s enterprise offerings inherit these assurances. That reduces the burden of conducting audits or building compliance from scratch.

Bottom line

At Aristek, we build AI systems with security at the core. From the first design phase to deployment and beyond, we apply proven practices to keep your data protected, your processes compliant, and your implementation aligned with business needs.

Already working with AI? We help organizations reinforce existing systems with controls that are time-tested, auditable, and enterprise-grade. Book a free consultation to learn how we can help your business.